PROLOGUE

We stand on the threshold of an era where the boundaries of possibility give way to a remarkable convergence of disciplines: theology, quantum physics, reverse engineering, computational biology, and laws that push beyond their conventional limits. From a machine designed to manipulate neutrinos and distort time, to the bold proposal of patenting abstract formulas—traditionally off-limits—this compendium aims to map a course toward what many already consider unimaginable.

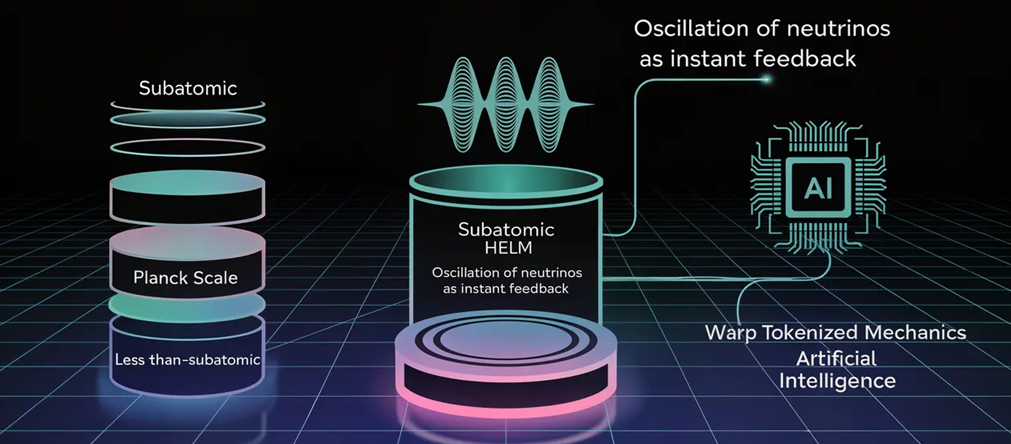

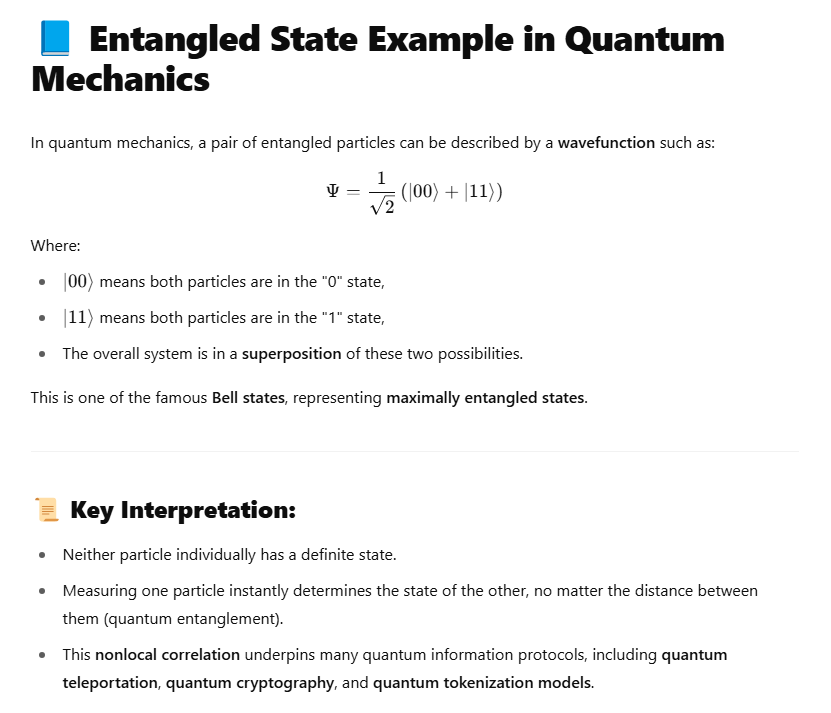

Within these pages converges the vision of extraordinary minds that, after centuries of intellectual labor, are redefining our certainties about creation, infinity, and matter. Here, neutrino quantum entanglement is no mere theoretical curiosity; it becomes the foundation for a machine that, powered by artificial intelligence, strives to travel through “fractal” quantum channels, paving the way for zero-time communications and the exploration of the farthest reaches of the universe. A “map” would be created that encompasses vast scales, enabling the illusion of hyperluminal travel. Meanwhile, the legal and theological momentum advocates transcending historical boundaries of intellectual protection, arguing that abstract formulas, as transcendental as they are indefinable, are also the cornerstones of an upcoming technological revolution.

This synergistic meeting of perspectives—ranging from rigorous science to biblical inspiration—demonstrates that human ingenuity is not limited to incremental advances: it reaches a breaking point where the norm becomes a mere stumbling block, and the implausible emerges as the engine of new creation. Here begins a quantum leap that pushes beyond the speed-of-light boundary, redefines patents as milestones of legal reinvention, and catapults human ambition toward a radical future, where yesterday’s impossibility becomes tomorrow’s highest achievement.

Daniel 12:4

ܘܐܢܬ ܕܐܢܝܐܝܠ ܣܘܪ ܠܡܠܐ ܗܕܐ ܘܩܛܘܡ ܐܦ ܣܪܗܪܐ ܕܟܬܒܐ، ܥܕ ܥܕܢܐ ܕܣܦܐ:

ܣܓܝܐܐ ܢܗܒܝܠܘܢ ܘܬܪܒܐ ܝܕܥܐ

(“But you, Daniel, shut up the words and seal the book until the time of the end; many shall run to and fro, and knowledge shall increase.”)

Matthew 19:26

ܐܡܪ ܝܫܘܥ ܠܗܘܢ:

ܥܡ ܒܢܝܢܫܐ ܗܳܕܶܐ ܠܳܐ ܫܳܟܺܝܚܳܐ،

ܐܠܳܐ ܥܡ ܐܠܳܗܳܐ ܟܽܠܗܝܢ ܡܨܐܟܝܚܳܐ ܗܶܢ

(“Jesus said to them: With men this is impossible, but with God all things are possible.”)

INTRODUCTION, GLOSSARY, ORIGINAL PUBLICATION DATED JUNE 26, 2015, THE PROBLEM, RESEARCH OBJECTIVES, GENERAL OBJECTIVE, SPECIFIC OBJECTIVES AND SOLUTIONS, RESEARCH METHODOLOGY FOR INVENTING THIS FORMULA, JURISPRUDENCE RELATED TO THE PROTECTION OR NON-PROTECTION OF ABSTRACT FORMULAS, CHALLENGES FOR CHANGE, DIALECTICS, THE TIME MACHINE, APPENDIX, AND BIBLIOGRAPHY.

📜TABLE OF CONTENTS

| No. | Section | Concise Content (English) |

|---|---|---|

| I | Introduction | 1. General context 2. Theological & legal motivations 3. Historical-scientific background |

| II | Glossary | Operational definitions (Algorithm, Quantum Entanglement, Neutrino, etc.) |

| III | Original Publication (26-VI-2015) | 1. The Aleph 2. Cantor & Borges 3. Neutrino swarm 4. Theological table 5. 2024 update |

| IV | Problem Statement | 1. Legal impossibility of patenting pure formulas 2. Technological gap & need for reinterpretation |

| V | Research Objectives | 5.1 General considerations |

| VI | General Objective | Block 1 Progressive interpretation of regulations Block 2 Proposal to protect abstract formulas with remote utility |

| VII | Specific Objectives & Solutions | Block 1 Invention-Formula Block 2 “Exception to the Exception” Block 3 Jurisprudential recommendations 4. Futuristic reflection |

| VIII | Methodology | 1. Sources (Hebrew/Aramaic, scientific literature) 2. Oneiric inspiration & historical precedents |

| IX | Comparative Case Law | 1. Alice v. CLS 2. Bilski v. Kappos 3. Mayo v. Prometheus 4-5. EU analysis & related precedents |

| X | Challenges to Normative Change | 1. Arguments against prohibition 2. Contra legem strategies 3. Legal evolution 4. Cases transcending abstraction |

| XI | Techno-Legal Dialectic | 1. Rule vs. progressive vision 2. Role of AI 3. Video summary (supplementary) |

| XII | Neutrino & Time Machine | 1. Preliminary design 2. AI + entanglement 3. Experimental evidence 4. Applications 5. Conclusions |

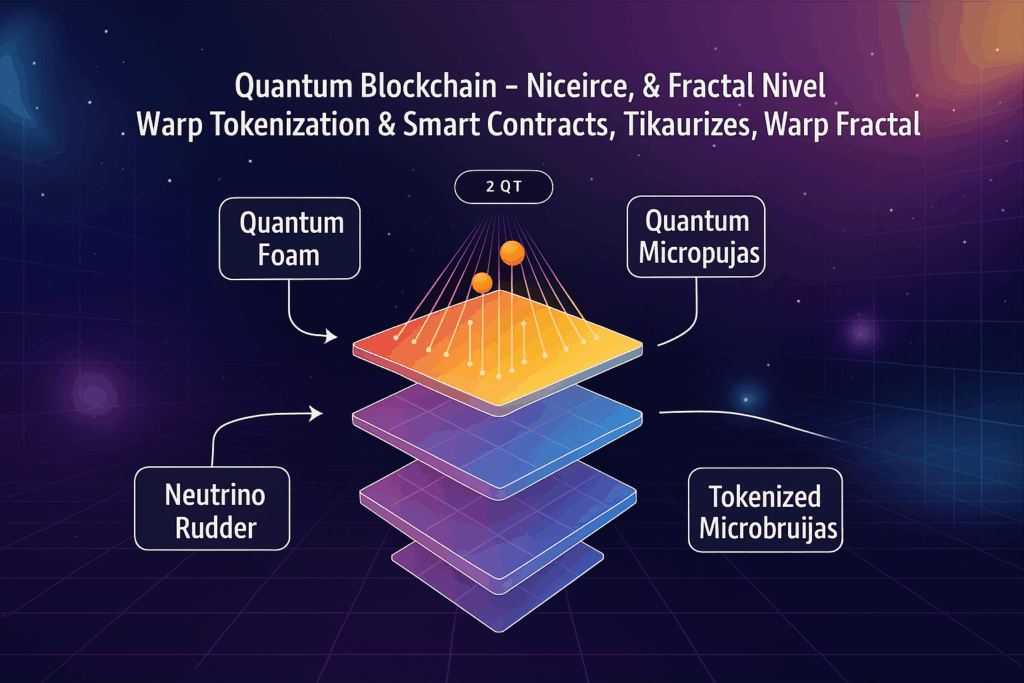

| XIII | Hyper-luminal Theological-Quantum Innovation Compendium | Items 1-16: From the light limit to an FTL illusion via Tokenization + AI |

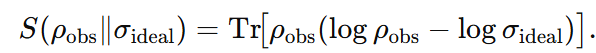

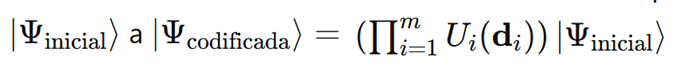

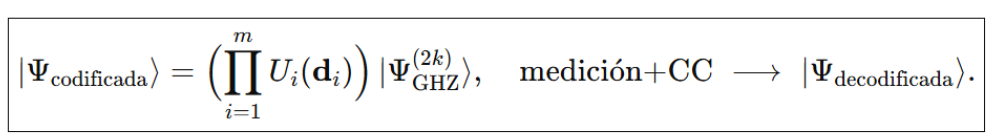

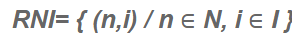

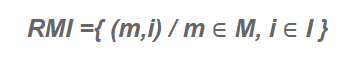

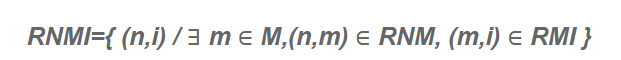

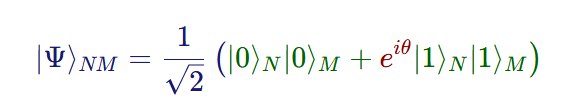

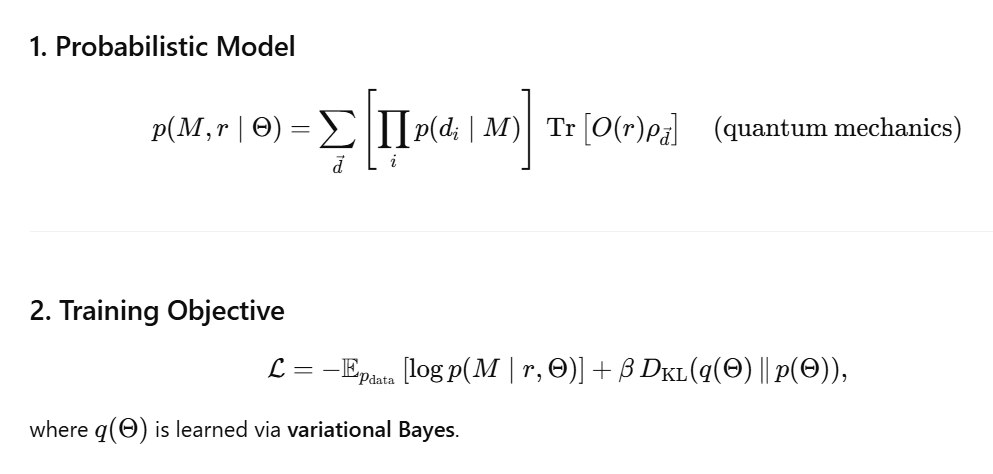

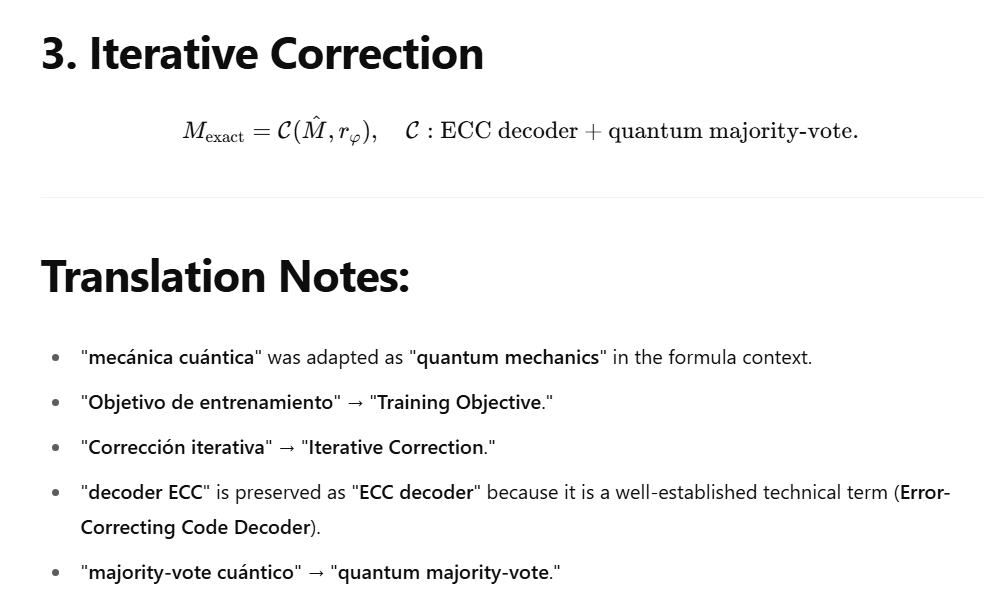

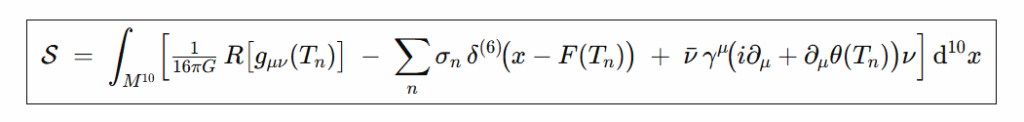

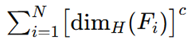

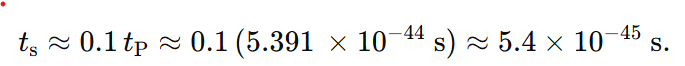

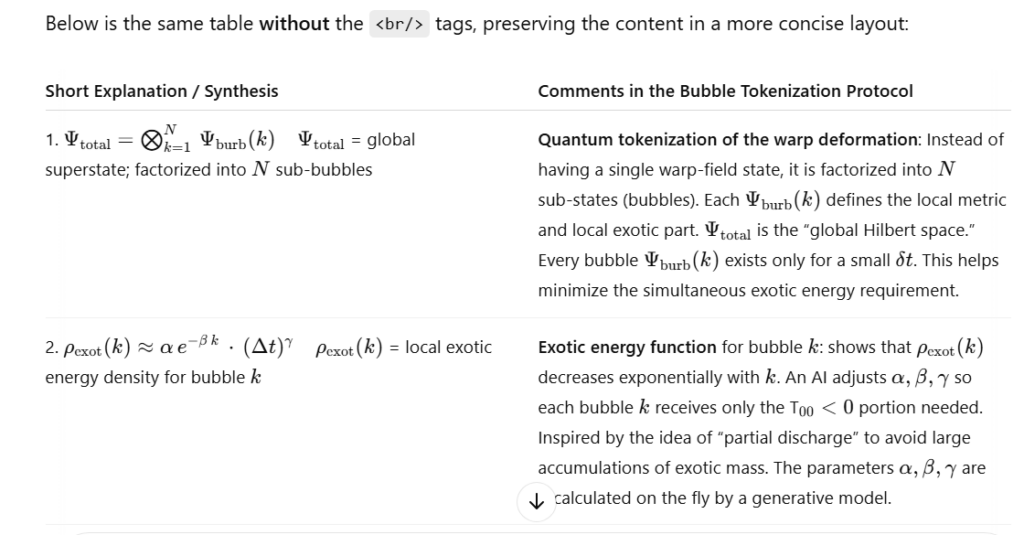

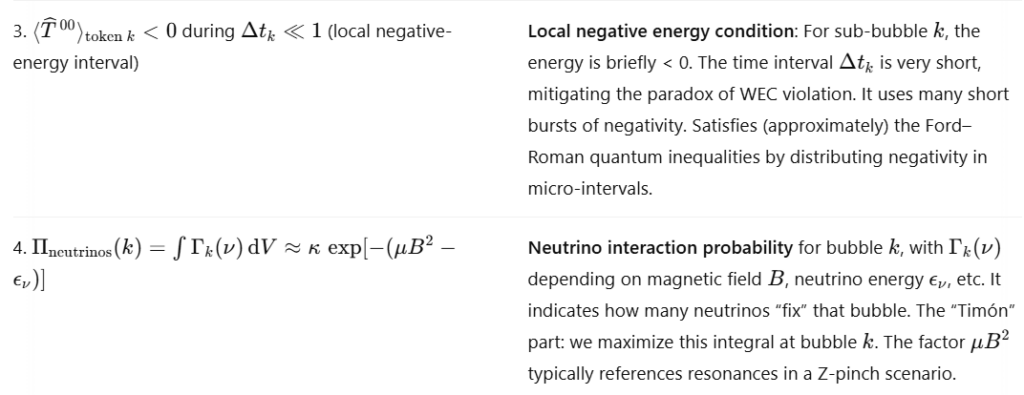

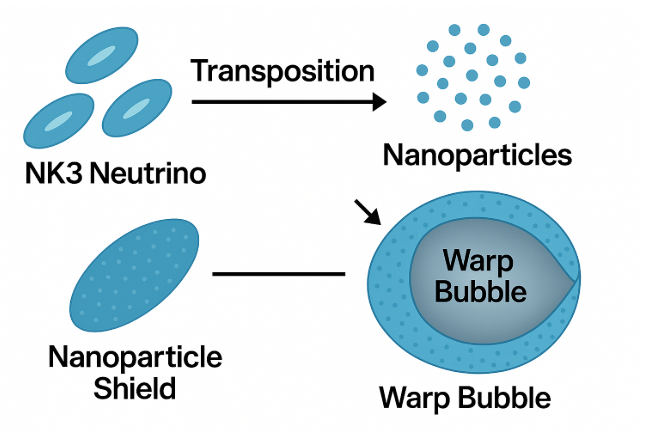

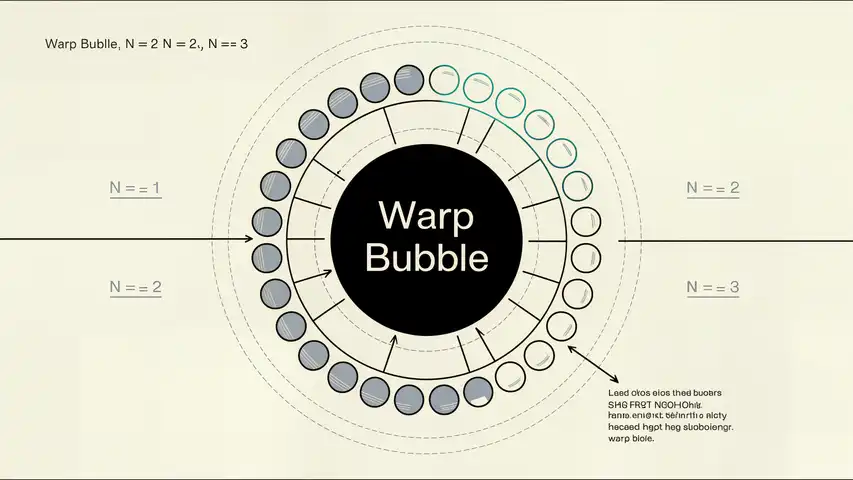

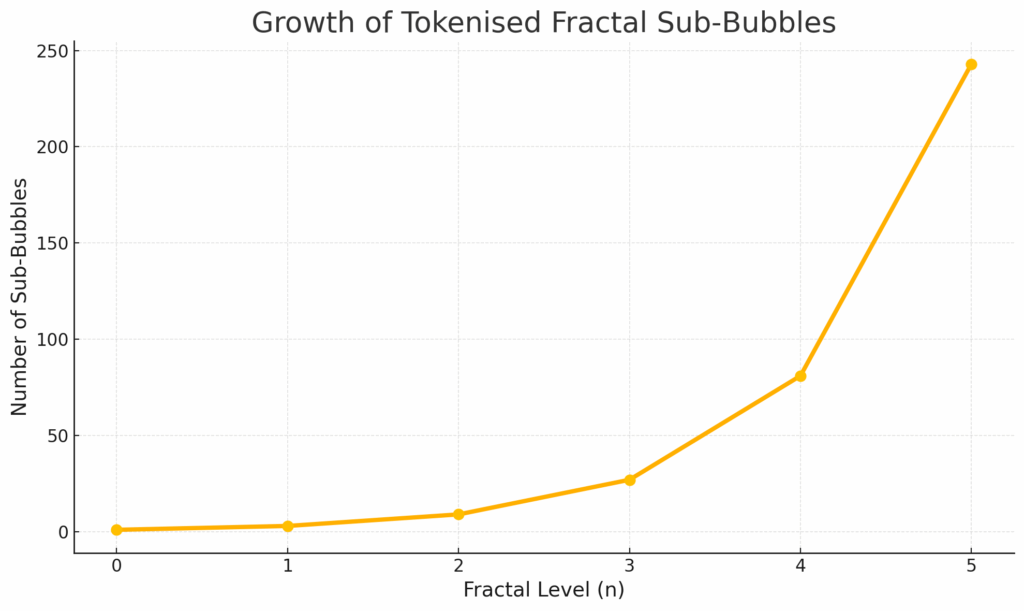

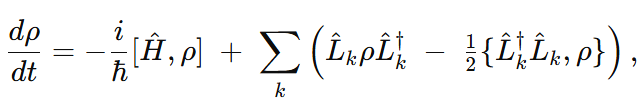

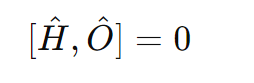

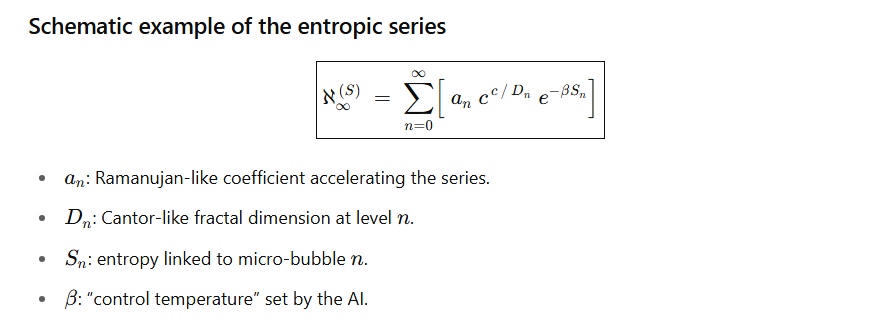

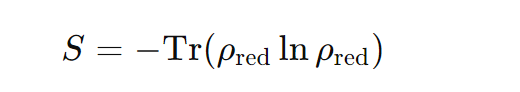

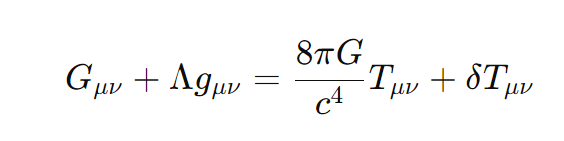

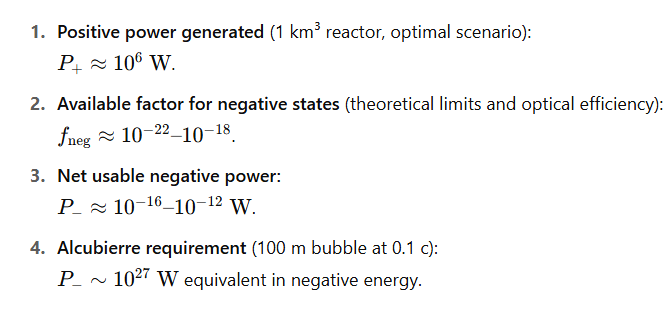

| XIV | Equations, Models, Protocols & Quantum Bubbles | 1. Set-theoretic analysis of the neutrino–matter–information quantum channel 2. Absolute-set definition 3. Relationships R N M (3.1 Neutrinos–matter; 3.2 Neutrinos–information; 3.3 Matter–information) 4. Composite relation & data transfer 5. Quantum information channel 6. Quantum tokenization & AI (6.1 General approach; 6.2 Tokenization role; 6.3 AI reconstruction & “residual data”; 6.4 Effective-data vs. orthodox objection) 7-10. Proofs, statistical issues, supporting equations 11-15. AI-genetic analogy, cross-pollination, disruptive ingredients (Dirac antimatter, tokenization, generative AI) 16-19. Hypothetical protocol, trans-warp “Hyper-Portal,” conclusions & projections 20. Ramanujan–Cantor Meta-Equation (20.1-20.10) 21-23. Biblical zero-time, theological link, quantum bubbles (design, pseudocode, neutrino mesh, conclusion) |

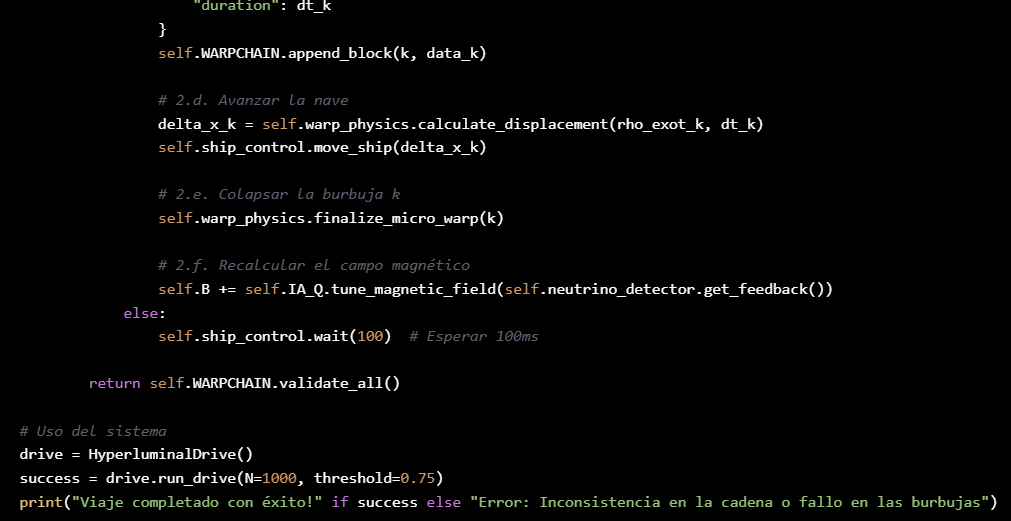

| XV | AI-Assisted Codes | 1. Script repository 2. Multiverse mathematical model |

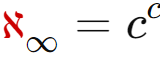

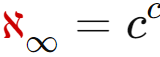

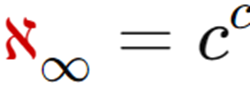

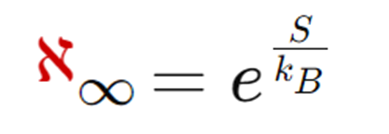

| XVI | Validations & Mathematical Aspects | 1. א∞ (Aleph-infinite) interpretation 2-4. Formal-analogy validation & simulations 5-6. Cantorian logic, Vitali & Banach–Tarski, alternative forms, final conclusion |

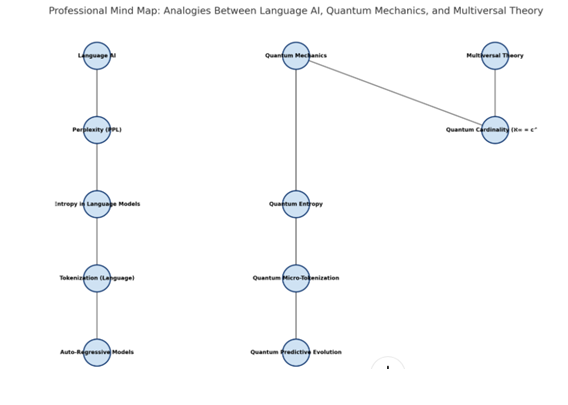

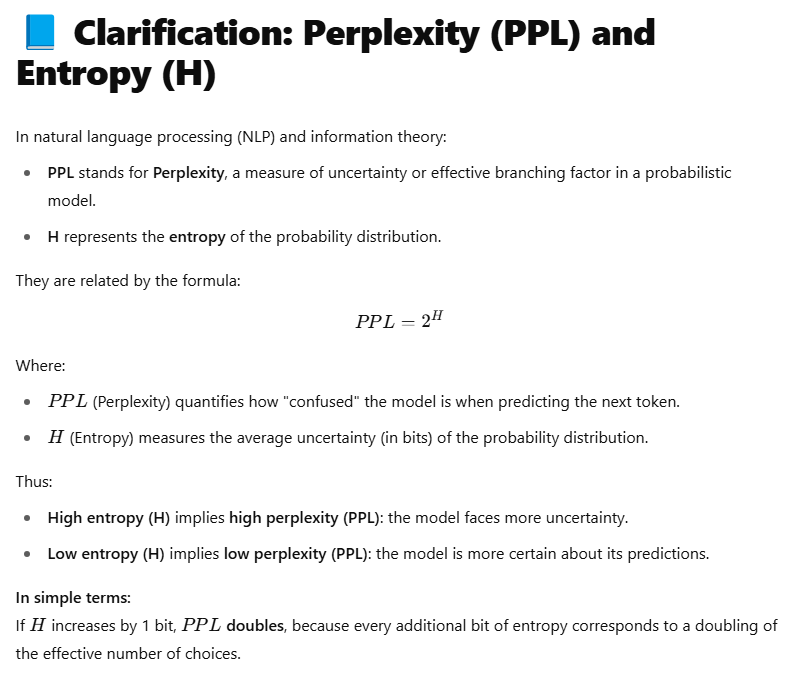

| XVII | Thematic Essay | “Geometry of the Infinite – Perplexity and א∞ = cᶜ”; link between PPL metric & multiversal complexity |

| XVIII | Meta-Summary | 1. Synoptic matrix 2. Visual synthesis 3. Specific conclusions (FTL exception, neutrinos, author’s view) 4. Global conclusions 5. Legislative/scientific/theological recommendations 6. Future protection of abstract formulas 7-10. Supporting maths, didactic & argumentative tables, cosmic threats |

| XIX | Epilogue | Final reflection, research-plan projection, “Beyond Light: Warp Routes & the Quantum Horizon,” unified table of theo-quantum knowledge, Bio-quantics & the Quantum Ark |

| Bibliography | Legal, scientific & theological references; supporting links | |

| Justification | Blocks I-IV = framework & problem • V-VIII = objectives & methods • IX-XI = legal analysis & change strategy • XII-XIV = technical-scientific core • XV = practical support • XVI-XVIII = executive meta-summary • XIX = academic traceability |

📖I. INTRODUCTION

Since the dawn of time, the UNIVERSE has been governed by an infinite set of rules configuring a matrix composed of mathematical, physical, artistic, and philosophical formulas, all oriented toward the complete understanding of how it functions. Humanity’s inexhaustible thirst for knowledge has no end, prompting mankind to devise all kinds of artifices to achieve practical applications from these discoveries, thereby deciphering all its mysteries and determining the applicable system to which we must all adhere.

This research seeks to follow in the footsteps of a constellation of brilliant minds in the fields of mathematics, physics, and the literary arts, whose purpose was to unravel the uncertainties of infinity, clarifying its true nature and seeking to distill it into a single equation — sufficiently broad yet simple — that would encapsulate the absolute whole. These outstanding minds even reached the threshold of human incomprehension among their peers, battling the severe destructive criticism and adversities of their era.

Following the teachings of Niccolò di Bernardo dei Machiavelli (Niccolò Machiavelli) in his illustrious work The Prince, I have prudently chosen to follow the paths outlined by some notable thinkers, such as Georg Ferdinand Ludwig Philipp Cantor, a forerunner of mathematical theology; Ludwig Eduard Boltzmann, in his theory of chaos and probabilities; Kurt Gödel, with his incompleteness theorem and intuitive vision of mathematics; Alan Mathison Turing, who pursued the practical application of Gödel’s theorem with relentless determination; and finally, Jorge Luis Borges, with his infinite Aleph — thus merging in a single vision three mathematicians, one physicist, and one literary figure — with the hope that my actions may, in some measure, resemble theirs.

This research adopts a theological perspective, supported by linguistic experts in ancient Hebrew and Aramaic regarding specific biblical verses, to maintain fidelity to the original sense of the texts in translation, while obeying the categorical mandate expressed by mathematician Georg Cantor that the answer to his absolute and incomplete formula could not be found in mathematics, but rather in religion.

Furthermore, in compliance with the requirements of the Intellectual Property course, principal points concerning the patenting of formulas, quantum algorithms, and the practical utility of the simulated invention are addressed. Issues regarding the design, the totality of the equations, and other administrative processes are omitted for the sake of summarizing the essay.

It is the aspiration that, thanks to human evolution in different fields of science and its symbiotic relationship with Artificial Intelligence (AI), the irreversible process of generating a new form of communication that traverses new routes across the cosmos may materialize in the near future.

The original publication is referenced, in which mathematical, physical, artistic, literary, and especially religious concepts are presented, intertwined in an inseparable manner to demonstrate the creation of the simplicity of the Formula and its extraction from various biblical verses interacting like a blockchain. The corresponding footnotes are highly illustrative and must be examined carefully.

The short-term objective of this research is to obtain a patent for the indivisible block or circuit of the invention, starting from the formula, transitioning through generative AI powered by advanced machine learning algorithms, and culminating in the construction of the toroidal-energy neutrino machine. Consequently, it seeks legal patent protection by advocating the application of the “exception to the exception” principle, and it also aspires to promote the use of AI to predict or “reconstruct” information before all classical bits are received, using neutrinos as a teleportation channel.

This work has been largely synthesized for quick comprehension, omitting many annotations, reflections, designs, glossary expressions, and bibliographic citations, focusing centrally on the core subject while preserving industrial secrecy, and highlighting that most of the illustrations were created by Artificial Intelligence (AI).

Under a simulated projection, a practical utility was conferred to the formula so that it would not be classified solely in an abstract sense, thus fitting within the assumptions for intellectual property protection.

This project falls under what might be called “anticipatory heuristic engineering.” It rests on the principle that certain mathematical structures—such as the Alcubierre metric, Burelli’s fractal/tokenization extensions, or the seed function ℵ∞ = cᶜ—can precede their technological realization, much as the Schwarzschild metric did before black holes were detected. Far from contradicting the scientific method, this hypothesis operates as its speculative vanguard.

It is my hope that this essay will be to your liking, and that together we may embark on the pilgrimage through the quantum universe.

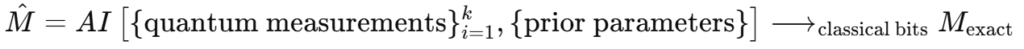

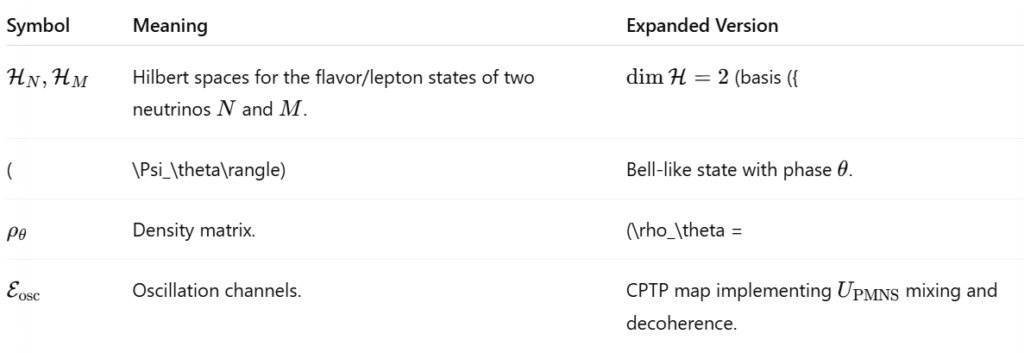

🪞II. GLOSSARY

Algorithm

A finite sequence of instructions executed in a prescribed order so a computer can perform calculations or solve a specific problem.

Modified Quantum Algorithm

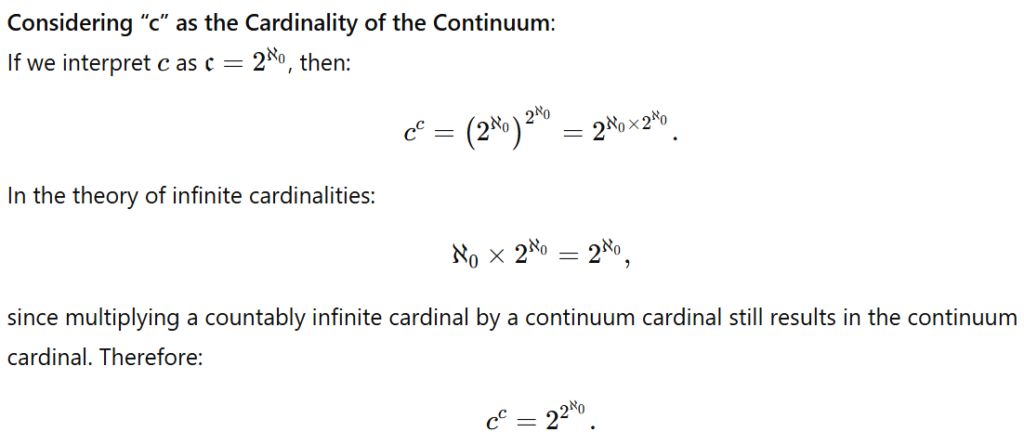

A quantum algorithm—i.e., a series of quantum gates acting on qubits, followed by measurement—revamped or newly designed to exploit uniquely quantum properties. Incorporating the seed formula ℵ∞ = cᶜ introduces “infinite‐branch” decision paths that weigh many variables simultaneously.

Alice, Bob, Eve (and friends)

Standard placeholder names in cryptography and quantum-information literature. Alice and Bob are the legitimate communicators; Eve (from “eavesdropper”) is the interceptor; additional agents such as Charlie or David appear when protocols call for mediators or extra key-holders.

Blockchain

A distributed, tamper-resistant ledger in which digital transactions are grouped into chronologically ordered blocks. Once recorded, data cannot be altered without network-wide consensus, ensuring transparency and integrity among all participants.

Temporal Loop

An anomalous region where time halts or slows drastically. Witnesses often report repetitive activity within the affected zone.

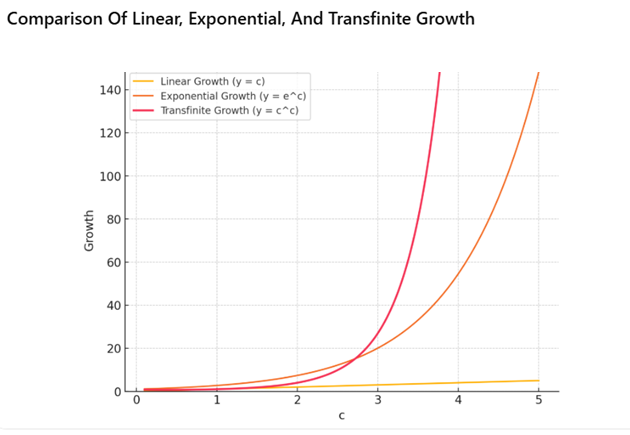

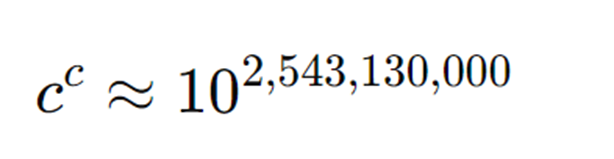

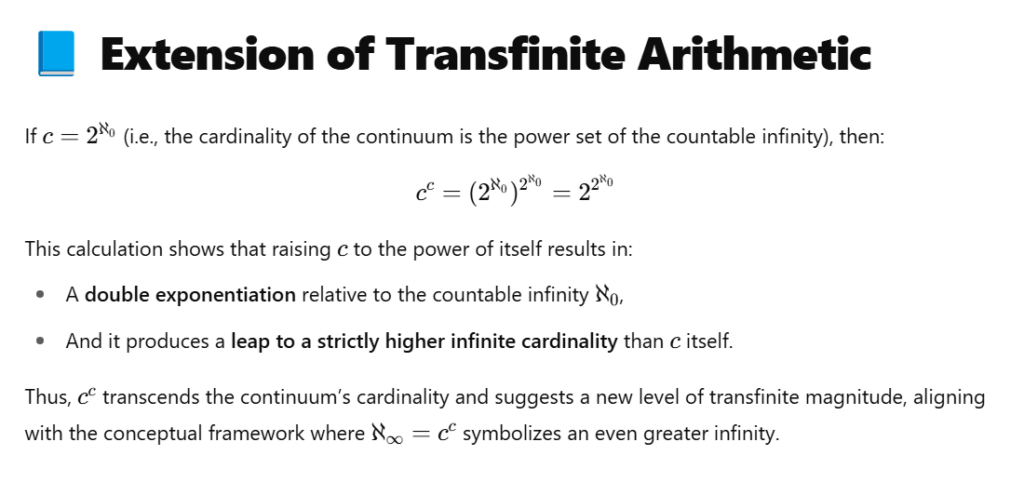

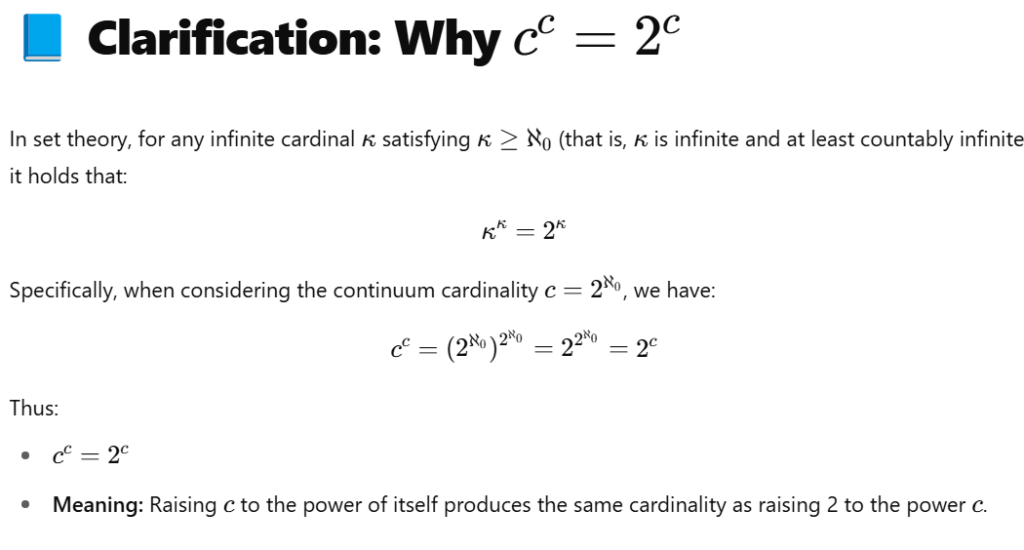

cᶜ (c raised to c)

“The speed of light to its own power.” The expression becomes a dimensionless, colossal number only after stripping c of units and has no direct role in conventional physics.
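
To give a sense of how colossal this number is, the sketch below estimates how many decimal digits cᶜ would have. It assumes that c is taken as the bare number 299,792,458 (its value in metres per second with the units dropped). That unit choice is an assumption of this example, not something the definition fixes, and a different unit system would give a different result. The function name is illustrative only.

```python
import math

# Assumption: c is the numerical value of the speed of light in m/s,
# stripped of its units. Another unit system would change the result.
C = 299_792_458


def digits_of_c_to_the_c(c: int = C) -> int:
    """Estimate the number of decimal digits of c**c without computing c**c."""
    # log10(c**c) = c * log10(c); the digit count is the floor of that, plus one.
    return math.floor(c * math.log10(c)) + 1


if __name__ == "__main__":
    print(f"c**c has roughly {digits_of_c_to_the_c():.3e} decimal digits")
```

Working with the logarithm avoids building the number itself, which would need on the order of a gigabyte of memory just to store.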

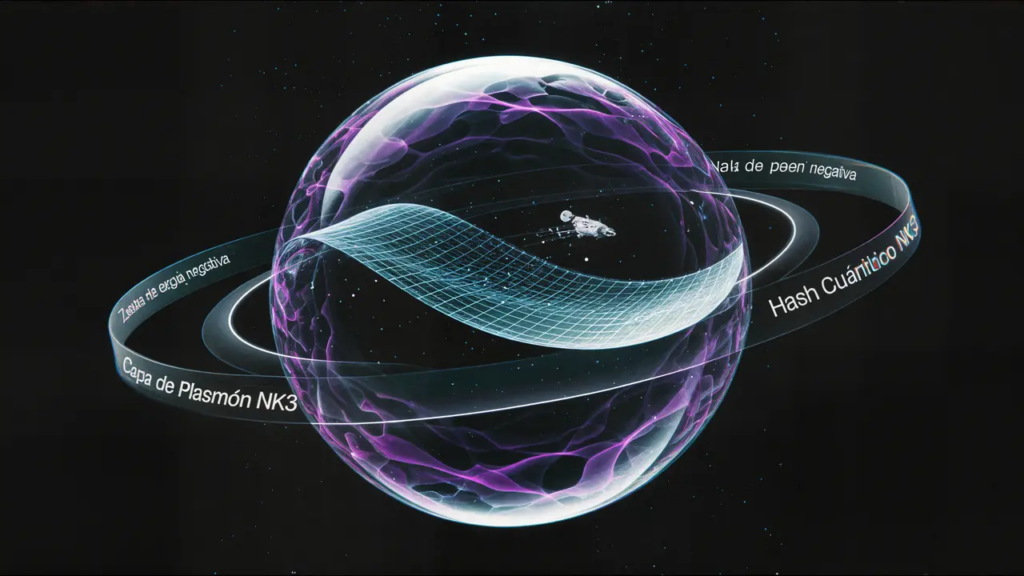

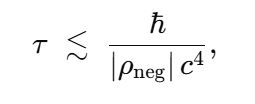

Quantum Bubble (operational definition)

A finite “island” whose vacuum energy, field states, or spacetime curvature differ from the surroundings. Three main contexts:

- False-vacuum bubble: scalar field tunnels to a true minimum; if the initial radius exceeds a critical size, the bubble expands at nearly light speed, potentially rewriting fundamental constants.

- Warp-curvature bubble: Alcubierre metric generated by negative energy density, allowing apparent super-luminal travel but demanding exotic energy and raising causality issues.

- Spacetime-foam micro-bubbles: Planck-scale topological fluctuations produce tiny regions of “nothing,” influencing quantum-gravity models.

Chris Van Den Broeck (1972– )

Belgian-Dutch theorist who proposed the 1999 “micro-warp drive,” a thinner, internally expanded version of the Alcubierre metric that slashes negative-energy requirements from galactic masses to (optimistically) stellar or even microscopic scales.

The Prince

Il Principe (c. 1513), Niccolò Machiavelli’s seminal treatise on power and statecraft.

White Dwarf

The Sun’s predicted final state: a dense, cooling stellar remnant that has exhausted its nuclear fuel and will fade ever more slowly.

Toroidal Energy / Toroid

A doughnut-shaped, self-sustaining energy vortex that continually cycles inward and outward—often invoked in metaphysics and some physics analogies as a universal, balanced flow pattern.

Quantum Entanglement

A non-classical correlation in which measuring one particle instantly determines the corresponding property of another, regardless of distance.

Neutrino Quantum Entanglement

Hypothetical entangled neutrino pairs could support long-distance quantum key distribution. Measuring the spin of one neutrino instantaneously fixes that of its partner, a feature that may enable secure communication, remote sensing, or deep-space navigation.

FTL (Faster-Than-Light)

Any purported object, signal, or effect travelling faster than light. Special relativity forbids actual information transfer above c; “FTL” claims in quantum contexts stem from correlations that disappear under careful analysis.

Reverse Engineering

Disassembling and analysing an existing product to understand its components, interactions, or manufacturing process with an eye to replication or improvement.

Artificial Intelligence (AI)

The capacity of digital systems or robots to perform tasks associated with intelligent agents—perceiving their environment, making autonomous decisions, and pursuing defined goals. Implementations may be pure software or embedded in hardware.

Fractal

A geometric figure in which a self-similar pattern repeats at multiple scales and orientations. The term, coined by Benoît Mandelbrot, derives from fractus (“broken” or “irregular”).

Dark Matter

A form of matter that neither emits nor interacts with electromagnetic radiation but reveals itself through gravitational effects. It constitutes roughly 27 % of the Universe’s mass-energy budget.

Neutrino

An electrically neutral, nearly massless fundamental particle—nicknamed the “ghost particle”—that passes through ordinary matter with minimal interaction; about 50 trillion solar neutrinos traverse each human every second.

Patent

A legal grant conferring on an inventor, for a limited time, the exclusive right to prevent others from making, using, importing, or selling the patented invention.

Solar “Supernova” (popular misnomer)

In ~5 billion years the Sun will exhaust core hydrogen, fuse helium, shed its outer layers, and become a white dwarf—an evolution distinct from a true supernova, which requires a much more massive star.

Infinite Set Theory (1895)

Georg Cantor’s late work that introduced “absolute infinity” and equated that unfathomable concept with the divine.

Transliteration

Rendering words written in one script into another script while approximating their original pronunciation, enabling readers unfamiliar with the source alphabet to vocalise the terms.


🔥III.- ORIGINAL PUBLICATION DATED JUNE 26, 2015

https://perezcalzadilla.com/el-aleph-la-fisica-cuantica-y-multi-universos-2/

English Version:

https://perezcalzadilla.com/el-aleph-la-fisica-cuantica-y-multi-universos/

1. THE ALEPH’S “א” MESSAGE, QUANTUM PHYSICS, AND MULTI-UNIVERSES

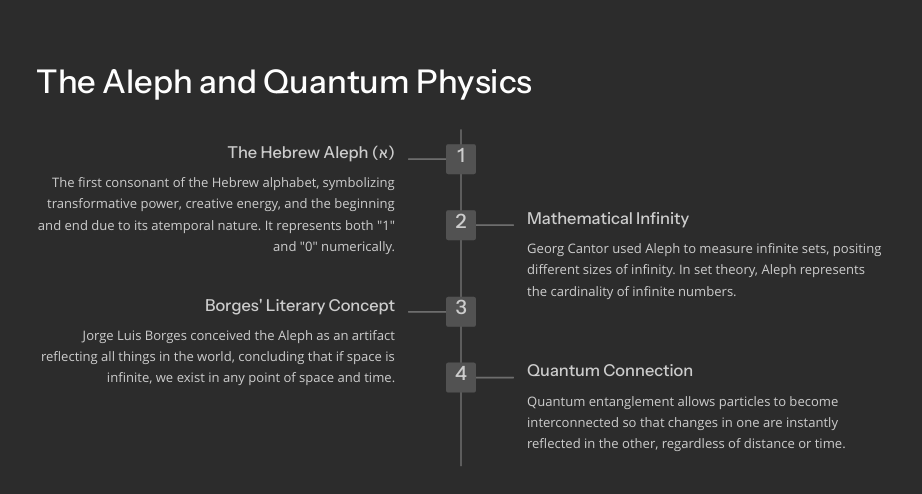

The Aleph, “א”, is the first consonant of the Hebrew alphabet [1]. It carries multiple meanings: it symbolizes transformative power, cultural force, creative or universal energy, the power of life, the channel of creation, as well as the principle and the end, due to its timeless nature.

Aleph is also the name given to the Codex Sinaiticus, a manuscript of the Bible written around the 4th century AD.

The origin of the letter «א» is traced back to the Bronze Age, around a thousand years before Christ, in the Proto-Canaanite alphabet — the earliest known ancestor of our modern alphabet. Initially, Aleph was a hieroglyph representing an ox, later transitioning into the Phoenician alphabet (Alp), the Greek alphabet (A), the Cyrillic (A), and finally the Latin (A).

In astrology, Aleph corresponds to the Zodiac sign Taurus (the Ox, Bull, or Aleph), its associated colors being white and yellow, and it is linked to Sulfur. Among Kabbalists, the sacred «ALEPH» assumes even greater sanctity, representing the Trinity within Unity, being composed of two «YOD» — one upright, one inverted — connected by an oblique line, thus forming: «א».

Aleph is a structure representing the act of taking something as nature created it and transforming it into a perfected instrument, serving higher purposes — a fiction extended over time. As the first letter of the Hebrew alphabet, Aleph holds great mystical power and magical virtue among those who adopted it. Some attribute it the numerical value “1,” while others consider its true value to be “0.” [2]

Curiously, although Aleph is the first letter, it is classified as a consonant, since the Hebrew alphabet has no vowel letters. In the primitive form of the language, the lack of written vowels invites multiple meanings for each word and maintains a certain suspense for the reader.

This absence of vowels is an artifact of Hebrew’s primitiveness and functions to sustain deferred meaning.

Thus, Aleph — unlike the Latin «A» or the Greek Alpha — simultaneously embodies the missing vowel and a vestige of the pictographic writing system it replaced.

Aleph, therefore, is a nullity, one of the earliest manifestations of «zero» in the history of civilization.

Like zero, Aleph is a meta-letter governing the code of Hebrew; because it lacks vowels, its meaning could be «nothing» [3].

Aleph also connects us to nothingness, emptiness, the place where nothing exists — a systematic ambiguity between the absence of things and the absence of signs, illustrating a semiotic phenomenon that transcends any formal system [4].

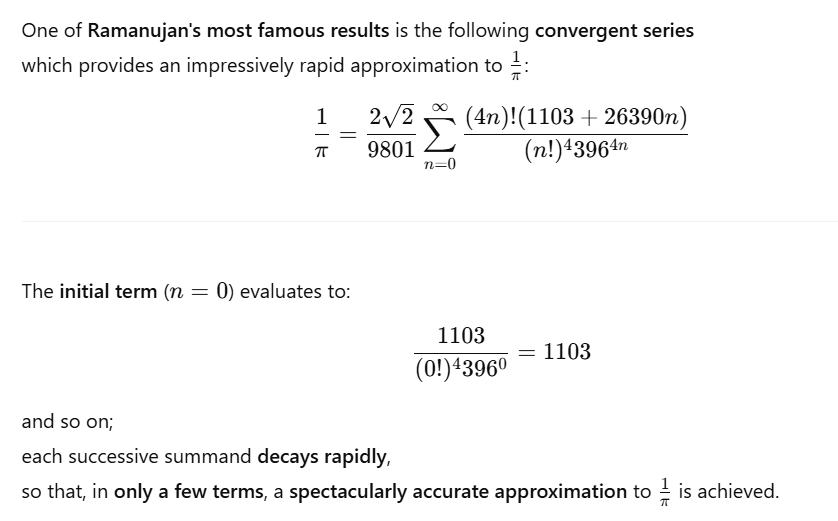

This led mathematician Georg Cantor [5] (1845–1918) to employ Aleph to measure infinite sets, defining various sizes or orders of infinity [6].

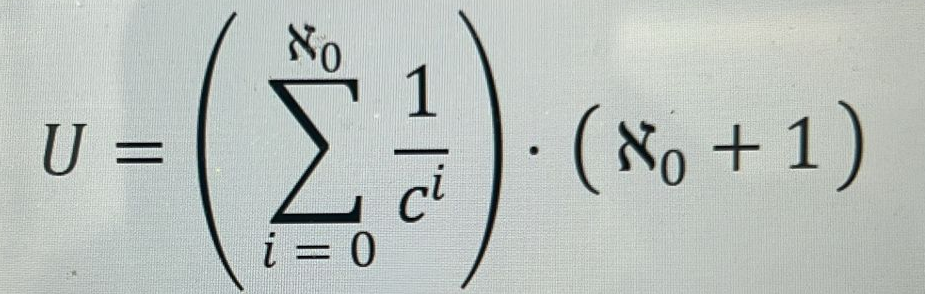

In his set theory, Aleph represents the cardinality of infinite sets.

For example, Aleph subscript “0” (ℵ₀) denotes the cardinality of the set of natural numbers: it is larger than every finite cardinal number and is the smallest of the transfinite cardinal numbers.

Likewise, Jorge Luis Borges, following Cantor’s quest for the absolute infinite [7], conceived Aleph as an artifact wherein all things in the world were reflected simultaneously [8], concluding that if space is infinite, we are at any point in space, and if time is infinite, we are at any point in time (The Book of Sand, 1975).

Borges also warned severely about the dangers inherent in the pursuit of infinity [9].

Cantor’s natural successor, the physicist Ludwig Eduard Boltzmann (1844–1906), later followed by Alan Mathison Turing [10], sought to frame infinity within a timeless structure.

Even in contemporary times, Aleph inspired Paulo Coelho in his work Aleph, where he narrates that it is the point where all the energy of the Universe — past, present, and future — is concentrated.

Perhaps this notion of nothingness also explains why the first word of the Bible, in Genesis, begins not with Aleph but with Beth («Bereshit»), a feminine-sense letter, even though Aleph is the first letter of the Hebrew alphabet.

Furthermore, the Hebrew pronunciation of Aleph yields a long «A» sound, corresponding to the Greek Eta with rough breathing («H»).

The consonant form of Aleph when pronounced with a long «E» corresponds to the Greek letters ΛΙ (Lambda-Iota).

Hebrew “Yod” corresponds to a slight «AH» deviation in sound relative to the Greek Alpha.

Hebrew Vav («HYOU») has no Greek equivalent, given that masculine names in Greek typically close with a consonant («S», or less frequently «N» or «R»).

This phonetic shift produced names like «Elias» (ΗΛΙΑΣ / HLIAS).

Thus, Aleph is intimately linked to the prophet Elias, who, like Enoch (Genesis 5:18–24; Hebrews 11:5), did not die [12] but was carried alive into Heaven.

As the Bible says, Elijah was taken up by a chariot of fire and horses of fire (2 Kings 2:11).

Elijah of Tishbe is one of the most fascinating figures in Scripture, appearing suddenly in 1 Kings without a genealogical record.

His role is crucial: he is the forerunner foretold in Malachi, heralding both the first and final comings of the Messiah.

In Matthew 11:14, Jesus reveals to his disciples that Elijah had already come — referring to John the Baptist.

Some theologians view these passages as evidence of reincarnation.

However, through the lens of modern science, it could be seen not as reincarnation but as a remote antecedent to quantum teleportation.

Scientists have already managed to teleport the quantum states of photons across 143 kilometers, between two of the Canary Islands, using principles of quantum physics.

Moreover, Israeli physicists recently entangled two photons that never coexisted in time, verifying entanglement beyond temporal barriers.

Quantum entanglement defies classical physics: two particles (such as photons) become connected so that any change in one is instantly reflected in the other, regardless of distance or temporal separation [13].

It would be extremely promising to explore hydrogen photons or neutrinos for quantum entanglement applications [14], given hydrogen’s primacy and abundance in the universe.

Is it not strikingly similar — the scientific phenomena of crossed laser beams and quantum teleportation — to the way the prophet Elijah was transported via fiery horses (symbolizing massive energetic forces)?

In the future, these quantum phenomena will likely become the foundation for perfected quantum computers and instantaneous quantum communication systems.

Analyzing Genesis 1:3 —

“וַיֹּאמֶר אֱלֹהִים יְהִי אוֹר וַיְהִי–אוֹר (Vayomer Elohim Yehi Or Vayehi-Or)”

— reveals three temporal dimensions:

- Yehi (Future Tense)

- Vayehi (Past Tense)

- Present Tense (implied, as Hebrew grammar tacitly conjugates the present tense).

The numerical value of the Hebrew word for Light, «OR» (אור), is 207 (a multiple of 3).

Inserting a «Yod» between the second and third letters yields «AVIR,» meaning «Ether,» the domain that supports the entire Creation.

The legacy of Georg Cantor, seeking a formula to encompass infinity, insisted that ultimate answers are found not in mathematics but in the biblical scriptures.

Psalm 139:16 states:

«Your eyes saw my substance, being yet unformed. And in Your book they all were written, the days fashioned for me, when as yet there were none of them.»

This suggests that the quest for the absolute whole is deeply tied to Genesis.

If Aleph symbolizes the Universe and is intimately connected to the Creator, we may thus conclude that:

א = C + C + C + … [18]

Undoubtedly, the Aleph provides the keys to expand the limits of reality and potential, reaching toward the Ein Sof [19] or the Multiverse [20][21][22].

(By PEDRO LUIS PÉREZ BURELLI — perezburelli@perezcalzadilla.com)

2. Brief Notes:

[1] During the period of the Temple under Roman rule, the people communicated colloquially in Aramaic for their daily tasks and work; however, in the Temple, they spoke exclusively in Hebrew, which earned it the designation «Lashon Hakodesh» — the Holy Language.

[2] The mathematical value of Aleph is dual; according to exegesis, it is binary [0, 1].

[3] Aleph, as a Hebrew letter, although it cannot be articulated itself, enables the articulation of all other letters, and by linguistic-literary extrapolation, it encapsulates the Universe within itself.

[4] Mathematician Kurt Gödel (1906–1978) argued that whatever system may exist, the mind transcends it, because one uses the mind to establish the system — but once established, the mind is able to reach truth beyond logic, independently of any empirical observation, through mathematical intuition.

This suggests that within any system — and thus any finite system — the mind surpasses it and is oriented toward another system, which in turn depends on another, and so on ad infinitum.

[5] Georg Cantor was a pure mathematician who created a transfinite epistemic system and worked on the abstract concepts of set theory and cardinality.

It was through his work that the realization emerged that infinities are infinite in themselves.

The first of these «infinities of infinities» discovered by Cantor is the so-called «Aleph,» which also inspired Jorge Luis Borges’ story of the same name.

From this also emerged the notion of the «Continuum.»

[6] In his interpretation of the absolute infinity, supported within a religious framework, Georg Cantor first used the Hebrew letter «Aleph,» followed by the subscript zero, ℵ₀, to denote the cardinal number of the set of natural numbers.

This number has properties that, under traditional Aristotelian logic, seem paradoxical:

- ℵ₀ + 1 = ℵ₀

- ℵ₀ + ℵ₀ = ℵ₀

- (ℵ₀)² = ℵ₀

It is somewhat similar to the velocity addition law in Special Relativity, where c + c = c (with c being the speed of light).
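
The relativistic side of this analogy can be checked numerically. For two collinear velocities u and v, special relativity composes them as (u + v) / (1 + uv/c²), so composing c with c returns c. The sketch below is a minimal illustration that works in units where c = 1 (an assumption of this example). It shows only the physical formula, not a formal link with cardinal arithmetic, and the function name is hypothetical.

```python
def add_velocities(u: float, v: float, c: float = 1.0) -> float:
    """Relativistic composition of two collinear velocities u and v."""
    # Einstein's velocity-addition formula: (u + v) / (1 + u*v / c^2).
    return (u + v) / (1.0 + (u * v) / (c * c))


if __name__ == "__main__":
    # Velocities are expressed as fractions of c (assumed unit choice).
    print(add_velocities(0.5, 0.5))  # two half-light speeds stay below c
    print(add_velocities(1.0, 1.0))  # composing c with c gives c again
```

In the same way that adding 1 to ℵ₀ does not produce a larger cardinal, composing any velocity with c never exceeds c. The resemblance is conceptual, as the note itself states.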

In set theory, infinity is related to the cardinalities and sizes of sets, while in relativity, infinity appears in the context of space, time, and energy of the universe.

Here there is an attempt to unify both formulas, considering that such unification is more a conceptual representation than a strict mathematical equation, as it combines concepts from different theoretical frameworks.

The pursuit of unification into an absolute is not an exclusive domain of mathematics; it also extends to physics, specifically to the conception of the unification theory of the four fundamental forces: gravity, electromagnetism, the strong nuclear force, and the weak nuclear force.

[7] The Cantorian infinite set is defined as follows: «An infinite set is a set that can be placed into a one-to-one correspondence with a proper subset of itself» — meaning that each element of the subset can be directly paired with an element of the original set. Consequently, the entire cosmos must comply with the axiom that postulates the equivalence between the whole and the part.
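
The definition above can be illustrated with the classic pairing of each natural number n with the even number 2n. The even numbers are a proper subset of the naturals, yet no natural number is left without a partner. The sketch below lists only a finite prefix of that pairing, since a program cannot enumerate the whole set. The function name and the `limit` parameter are illustrative assumptions.

```python
from itertools import count, islice


def pair_naturals_with_evens(limit: int) -> list[tuple[int, int]]:
    """Pair each of the first `limit` naturals n with the even number 2n."""
    # n -> 2n is one-to-one: distinct naturals always map to distinct evens.
    return [(n, 2 * n) for n in islice(count(0), limit)]


if __name__ == "__main__":
    for natural, even in pair_naturals_with_evens(5):
        print(f"{natural} <-> {even}")
```

Because the rule n ↦ 2n never runs out of partners, the whole set and its proper subset have the same cardinality, ℵ₀.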

[8] Jorge Luis Borges sought to find an object that could contain within itself all cosmic space, just as in eternity, all time (past, present, and future) coexists. He describes this in his extraordinary story «The Aleph,» published in Sur magazine in 1945 in Buenos Aires, Argentina.

Borges reminds us that the Aleph is a small iridescent sphere, limited by a diameter of two or three centimeters, yet containing the entire universe.

It is indubitable evidence of the Infinite: although physically limited by its diameter, the sphere contains as many points as infinite space itself. Borges also represents this idea in the form of hexagons in The Library of Babel.

[9] «We dream of the infinite, but reaching it — in space, time, memory, or consciousness — would destroy us.»

Borges implies that the infinite is a constant chaos and that attaining it would annihilate us, because humanity is confined by space, time, and death for a reason: without such limits, our actions would lose their meaning, as we would no longer weigh them with the awareness of mortality.

For Borges, the Infinite is not only unreachable; even any part of it is inconceivable.

This vision aligns with the Incompleteness Theorem of Kurt Gödel (1906–1978), which asserts that any consistent formal system expressive enough to contain arithmetic includes statements that can be neither proved nor refuted within it.

In the works of Borges and Federico Andahazi (The Secret of the Flamingos, Buenos Aires: Planeta, 2002), a significant comment emerges:

If it were possible to attain an Aleph, human life would lose its meaning.

Life’s value greatly depends on the capacity for wonder: resolving uncertainties creates new mysteries.

After all, finding an absolute implies reaching a point of maximum depth and maximum sense — and ceasing to be interesting.

This warning resonates with Acts 1:7:

«It is not for you to know the times or dates the Father has set by His own authority.»

And Deuteronomy 4:32 urges reflection on the unreachable nature of divine acts.

Matthew 24:36 further confirms:

«But about that day or hour no one knows, not even the angels in heaven, nor the Son, but only the Father.»

Additionally, Rabbi Dr. Philip S. Berg, in The Power of the Alef-Bet, states:

«If we lived in a world where there was little change, boredom would soon set in. Humanity would lack motivation to improve. Conversely, if our universe were completely random, we would have no way to know which steps to take.»

This reflection is also echoed in Ecclesiastes 7:14:

«When times are good, be happy; but when times are bad, consider: God has made the one as well as the other, so that no one can discover anything about their future.»

[10] Ludwig Boltzmann (1844–1906) mathematically expressed the concept of entropy from a probabilistic perspective in 1877.

The tireless search for mathematical truths continued with Alan Mathison Turing.

The tendency to encapsulate infinity within a timeless framework is not unique to science; it extends to the arts.

William Blake (1757–1827), in Auguries of Innocence (c. 1803), poetically addresses the Infinite:

«To see a World in a Grain of Sand,

And a Heaven in a Wild Flower,

Hold Infinity in the palm of your hand,

And Eternity in an hour.»

[11] The name of the prophet Elijah (El-Yahu) is composed of two Sacred Names:

- El (mercy, Chesed)

- Yahu (compassion, Tiferet)

Elijah is intimately connected to the ordering of chaos through light on the first day of Creation.

His name is spelled: Aleph (א), Lamed (ל), Yod (י), He (ה), Vav (ו) — and contains elements of the Tetragrammaton.

Aleph, notably, is a silent letter.

Psalm 118:27 reads:

«The LORD is God, and He has made His light shine upon us.»

The consonants align with Elijah’s name, illustrating his connection to divine illumination.

Hebrew is a dual language of letters and numbers (Sacred Arithmetic).

Each letter has a numerical value, linking words through spiritual consciousness.

- The word «Light» (Aleph, Vav, Resh) = 207 + 1 (integrality) = 208.

- «Elijah» (Aleph, Lamed, Yod, He, Vav) = 52, and 52 × 4 = 208.

Thus, there is a mathematical identity between Light and Elijah.
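The arithmetic above can be checked mechanically. The following is a minimal Python sketch, assuming the standard gematria values for the six letters involved (Aleph = 1, He = 5, Vav = 6, Yod = 10, Lamed = 30, Resh = 200). The function name `gematria` and the variable names are illustrative and do not come from the source.

```python
# Standard gematria values for the letters used in the text (assumed table).
LETTER_VALUES = {
    "\u05d0": 1,    # Aleph
    "\u05d4": 5,    # He
    "\u05d5": 6,    # Vav
    "\u05d9": 10,   # Yod
    "\u05dc": 30,   # Lamed
    "\u05e8": 200,  # Resh
}

def gematria(word: str) -> int:
    """Sum the numerical values of the Hebrew letters in `word`."""
    return sum(LETTER_VALUES[letter] for letter in word)

LIGHT = "\u05d0\u05d5\u05e8"               # Aleph, Vav, Resh
ELIJAH = "\u05d0\u05dc\u05d9\u05d4\u05d5"  # Aleph, Lamed, Yod, He, Vav

light_value = gematria(LIGHT)    # 1 + 6 + 200
elijah_value = gematria(ELIJAH)  # 1 + 30 + 10 + 5 + 6

print(light_value, light_value + 1)    # 207 208
print(elijah_value, elijah_value * 4)  # 52 208
```

The sketch only confirms the sums. The added unit (integrality) and the factor of 4 are interpretive steps taken from the text, not results of the computation.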

The multiplication factor of 4 is explained by the story of Pinchas, son of Eleazar, grandson of Aaron.

In the Pentateuch, Pinchas halts a deadly plague, is granted an «Everlasting Covenant,» and becomes identified with Elijah.

The Kabbalah explains that Pinchas received the two souls of Nadab and Abihu (Aaron’s sons who died offering unauthorized fire).

When Elijah transfers his wisdom to Elisha, Elisha requests «a double portion» of Elijah’s spirit.

The inserted Hebrew word «Na» («please») hints at Nadab and Abihu (initials N and A).

Thus:

- 2 souls × 2 (double spirit) = 4

- 52 × 4 = 208 (identity of Light and Elijah).

[12] The prophet Elijah escapes the law of Entropy — a key concept in the Second Law of Thermodynamics stating that disorder increases over time.

[13] The technique used by Israeli physicists to entangle two photons that never coexisted in time involves:

- First entangling photons «1» and «2»

- Measuring and destroying «1» but preserving the state in «2»

- Then creating a new pair («3» and «4») and performing a joint Bell-state measurement on «2» and «3»

- Thus, «1» and «4» (which never coexisted) become entangled.

This shows that entanglement is not limited to particles that coexist in time. Some physicists have conjectured a link between entanglement and wormholes, which are tunnel-like bridges in spacetime.

Potential applications could revolutionize quantum communication and instantaneous data transfer without physical transmission.
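The swapping step in note [13] can be illustrated with a small state-vector calculation. This is a minimal NumPy sketch under simplifying assumptions: ideal qubits stand in for photons, both pairs start in the Bell state Φ+, and the joint measurement on «2» and «3» is modelled as a projection onto Φ+. It does not model timing, so it shows only the algebra of the swap, not the temporal ordering of the experiment. All variable names are illustrative.

```python
import numpy as np

# Bell state |Phi+> = (|00> + |11>) / sqrt(2)
bell = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)

# Two independent pairs: (1,2) and (3,4). Tensor indices: q1, q2, q3, q4.
psi = np.kron(bell, bell).reshape(2, 2, 2, 2)

# Before the joint measurement, qubits 1 and 4 share no correlation:
# their reduced density matrix is maximally mixed (identity / 4).
rho_14_before = np.einsum("abcd,ebcf->adef", psi, psi.conj()).reshape(4, 4)

# Project qubits 2 and 3 onto |Phi+> (one of four Bell outcomes).
projector = bell.reshape(2, 2).conj()
unnormalised = np.einsum("bc,abcd->ad", projector, psi)

probability = float(np.vdot(unnormalised, unnormalised).real)
state_14 = unnormalised.reshape(4) / np.sqrt(probability)

# Overlap of the resulting (1,4) state with |Phi+>.
fidelity = float(abs(np.vdot(bell, state_14)) ** 2)

print("outcome probability:", round(probability, 3))
print("fidelity of qubits 1 and 4 with Phi+:", round(fidelity, 3))
```

In this idealised model, qubits 1 and 4 end up in a Bell state even though they never interacted directly. Identifying which Bell state they share still requires the measurement outcome from qubits 2 and 3.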

[14] The Bohr model for hydrogen describes quantized electron transitions, where photon emission occurs between discrete energy levels (n).
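For reference, the standard Bohr-model expressions for hydrogen (textbook results, not taken from the source) are:

$$E_n = -\frac{13.6\ \text{eV}}{n^2}, \qquad h\nu = E_{n_i} - E_{n_f} = 13.6\ \text{eV}\left(\frac{1}{n_f^2} - \frac{1}{n_i^2}\right), \quad n_i > n_f$$

As a worked example, the transition from $n_i = 3$ to $n_f = 2$ releases $13.6\ \text{eV} \times \left(\tfrac{1}{4} - \tfrac{1}{9}\right) \approx 1.89\ \text{eV}$, which corresponds to red light near 656 nm.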

[15] What «light» does Genesis 1:3 refer to?

It refers to a special light — part of the Creator Himself — different from the visible light created on the fourth day (Genesis 1:14).

See also 1 Timothy 6:16, describing the Creator dwelling in «unapproachable light.»

[16] Paradox: the union of two seemingly irreconcilable ideas.

[17] «Universe,» here, is understood in the Borgesian sense: «the totality of all created things», synonymous with cosmos.

[18] The formula א = c + c + c approximates infinity, where «c» is the speed of light in its three temporal states: future, present, and past.

[19] Ein Sof: the absolutely infinite God in Kabbalistic doctrine.

[20] Stephen Hawking and Leonard Mlodinow (The Grand Design, 2010) argue for the existence of multiple universes, possibly with different physical laws.

[21] Ephesians reminds us that we have a limited number of days:

«Be very careful, then, how you live — not as unwise but as wise, making the most of every opportunity, because the days are evil.» (Ephesians 5:15–16)

[22] The coexistence of multiple universes—and their capacity to interact—is a quantum-physics hypothesis. It proposes that the sum of all dimensions constitutes an infinite set, with each dimensional subset vibrating at its own unique oscillation frequency, distinct from those of every other universe. These intrinsic frequencies initially keep each of the universes isolated within the overarching structure. Nevertheless, if every point in space-time belongs to a common sub-structure—termed the Universe and framed by fractal geometry—then interaction, relationships, and even communication between universes become possible whenever modifications arise in the space-time fabric. Such anomalies establish the principle of Dimensional Simultaneity, which applies to particle physics and has been observed in the following instances:

- Subatomic particles, such as electrons, can occupy different positions simultaneously within the same orbital.

- Elementary particles, such as neutrinos, can traverse paths that last longer than their mean lifetime.

- Fundamental particles, such as quarks and leptons, can occupy the same location at the same time, making their material and energetic effects indistinguishable.

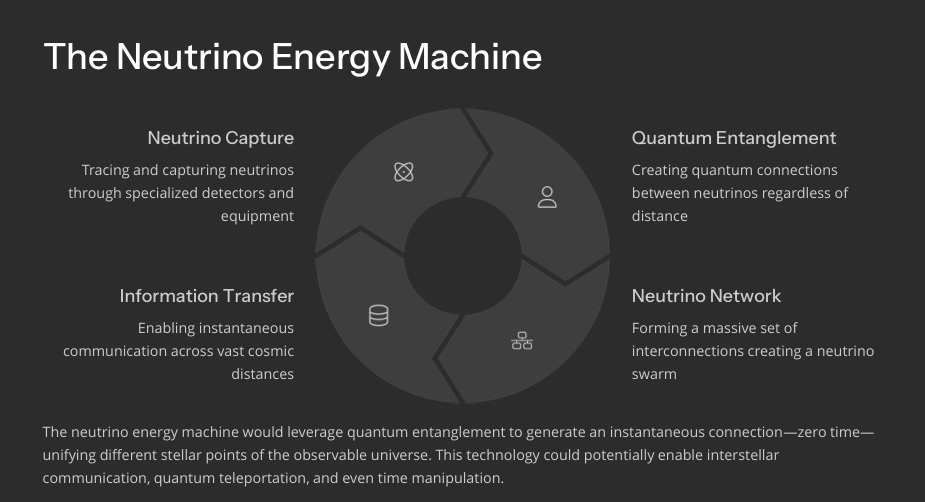

3. NEUTRINO SWARM

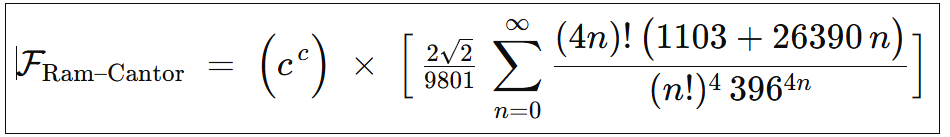

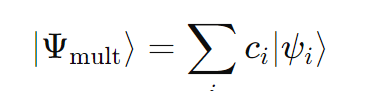

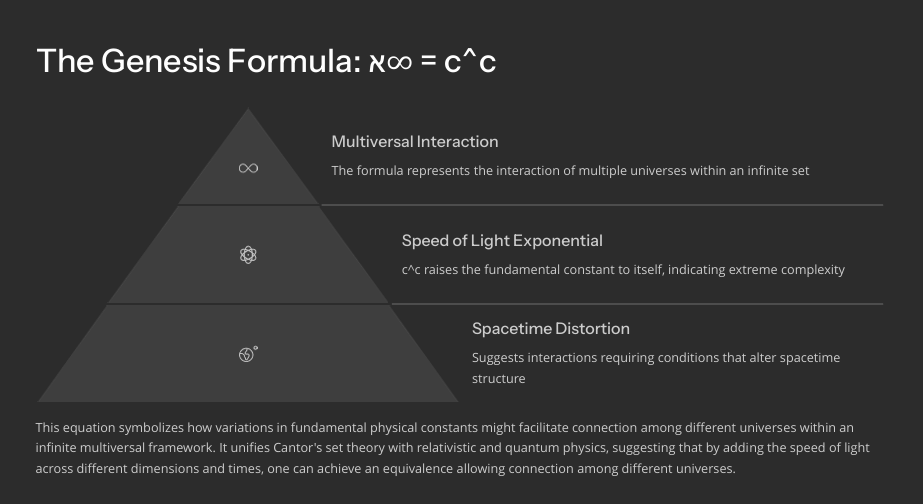

To achieve this correspondence between dimensional sets, the individual frequencies of the universes belonging to each Cantorian set must be unified within an infinitesimal moment, thereby materializing the axiomatic equivalence between the whole and its part. For this to happen, the additions of the speed of light must reach magnitudes large enough to generate the corresponding spacetime anomaly within the universe, thus configuring an interface that enables interaction between different universes.

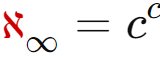

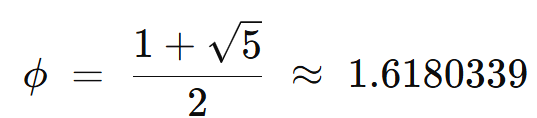

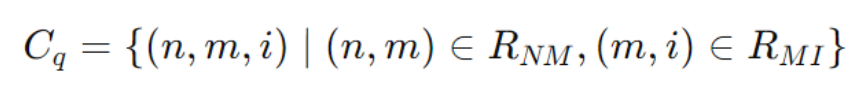

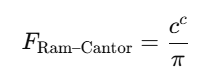

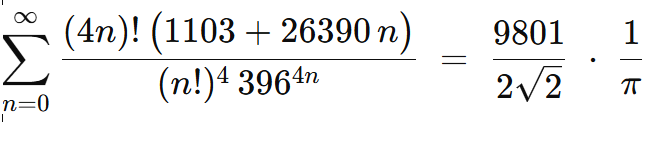

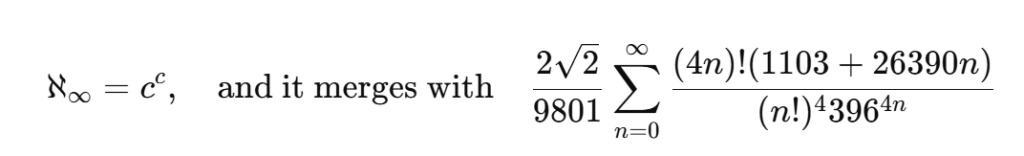

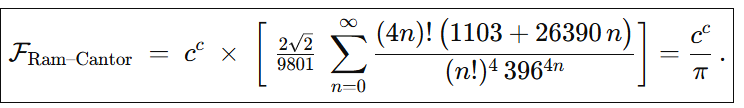

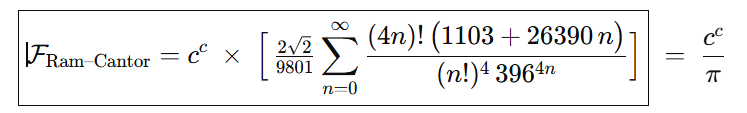

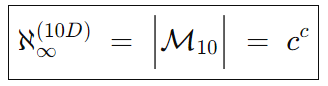

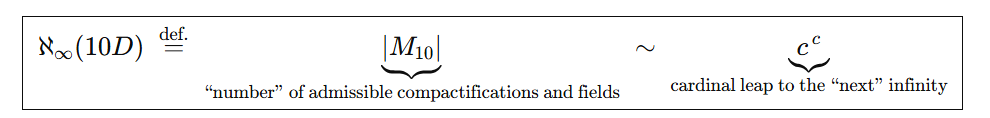

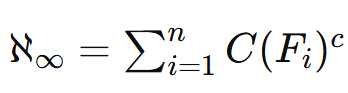

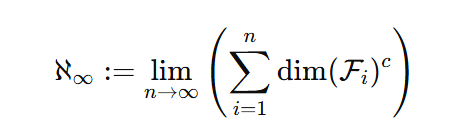

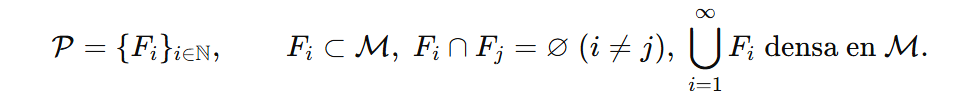

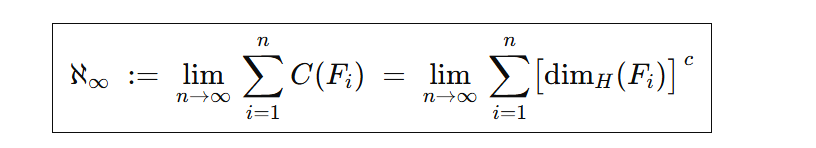

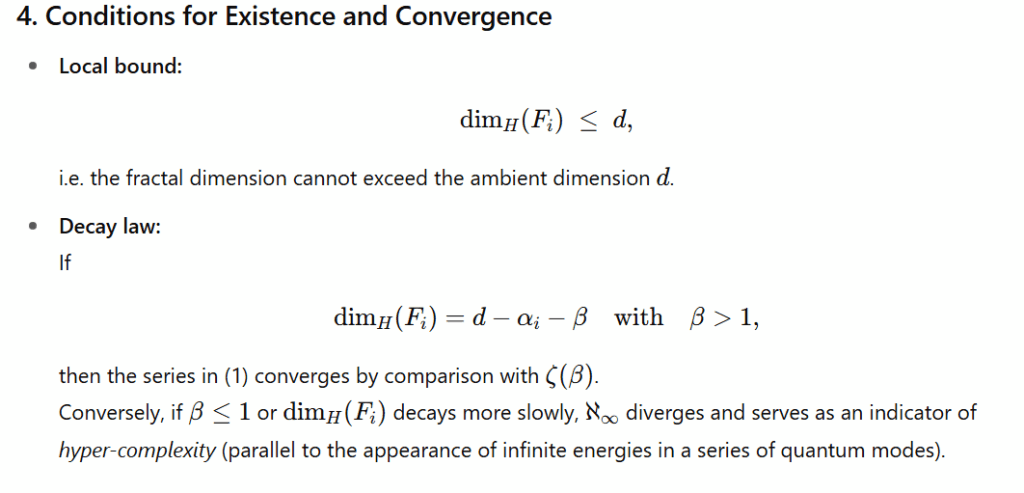

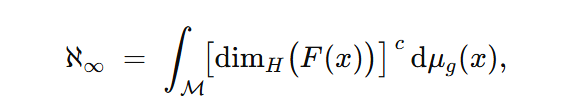

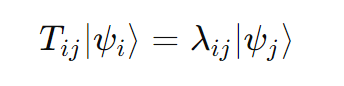

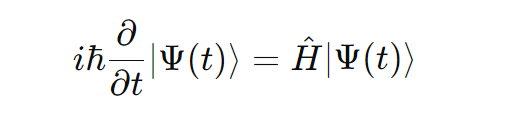

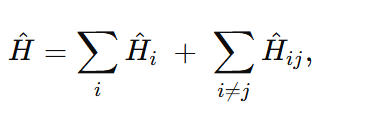

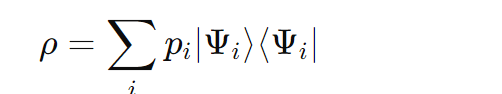

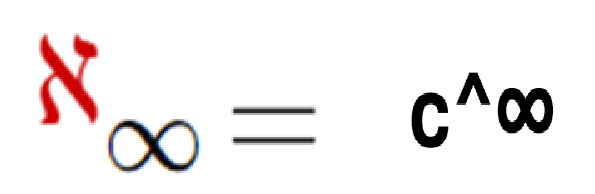

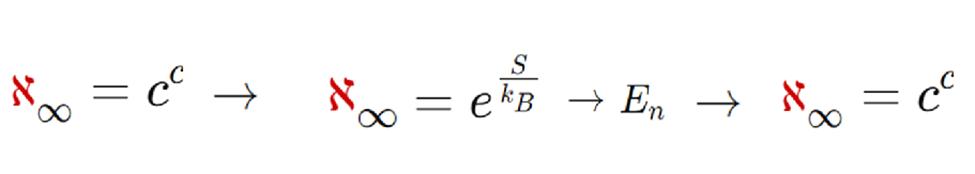

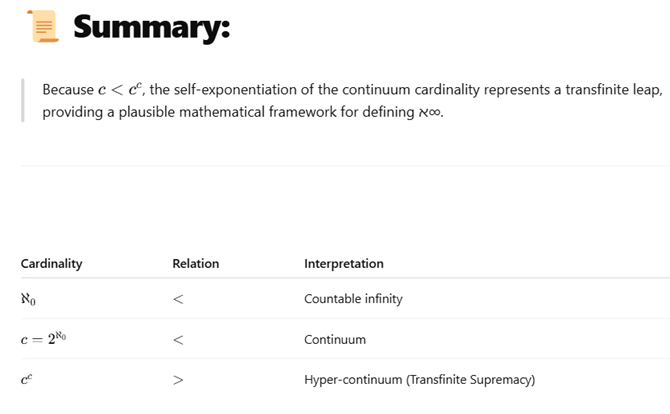

We could represent this conclusion by the following equation or formula:

א∞ = c^c

Where:

- «א∞» represents the interaction of two or more multiverses belonging to an infinite set or subset.

- «c» stands for the speed of light, and «c^c» is the speed of light raised to its own power (self-exponentiation).

Summary Interpretation:

This formula establishes a relationship between multiverse interaction and the speed of light, suggesting that such correlation generates a spacetime distortion proportional to the «amplification» of the speed of light.

If the interaction of all multiverses is executed within a single unit, it implies that all dimensions converge into an absolute whole, which would demonstrate an omnipresent power.

This equation attempts to unify:

- Cantorian set theory,

- Relativistic physics, and

- Quantum mechanics,

suggesting that through the amplification of the speed of light (c^c) across different dimensions and times, one could reach an equivalence that allows the linkage between different universes within a common spacetime framework.

This formulation seeks to capture the essence of infinity and divine omnipresence, integrating physical and theological concepts into a single expression that symbolizes the unity of all dimensions and the interaction of multiverses within an infinite and absolute framework.

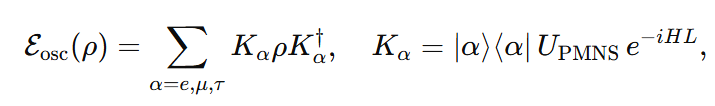

4. Table: Biblical Passages and Quantum Resonances — Instantaneity, Eternity, and Access to Infinity

Below is a table that relates various Bible passages or verses to the core ideas presented (time travel, quantum entanglement, «quantum tokenization,» zero-time data transmission, etc.).

Brief notes on Hebrew or Aramaic are included where relevant, and an explanation is given of the possible analogy or resonance between the biblical text and quantum-philosophical concepts.

| Passage | Text / Summary | Theological Meaning | Quantum Resonance and Language Notes |
|---|---|---|---|
| Genesis 1:3 | «And God said, ‘Let there be light,’ and there was light.» Hebrew: וַיֹּאמֶר אֱלֹהִים יְהִי אוֹר וַיְהִי־אוֹר | The creative word («amar», «said») instantaneously activates light; «yehi or» is a performative act. | Emergence of a quantum state by wavefunction collapse; light as primordial information. “יהי” (yehi) is in jussive-imperative form. |
| 2 Peter 3:8 | «With the Lord, a day is like a thousand years, and a thousand years are like a day.» | Highlights divine temporal relativity. | Spacetime flexibility: relativity and quantum simultaneity disrupt human linear perception. Echoes Psalm 90:4. |
| Colossians 1:17 | «He is before all things, and in Him all things hold together.» | Christ precedes and sustains all creation. | «συνίστημι» (synístēmi) = «to hold together,» evoking universal entanglement. |
| Hebrews 11:5 / Genesis 5:24 | Enoch «walked with God and disappeared.» | Mysterious translation without conventional death. | Suggests dimensional jump or existential teleportation. “אֵינֶנּוּ” (enénnu) = «he is no longer.» |
| Exodus 3:14 | «I Am that I Am» (אֶהְיֶה אֲשֶׁר אֶהְיֶה) | God reveals Himself as self-existent and eternally present. | Points to an «absolute present,» similar to quantum superposition. “אֶהְיֶה” (Ehyeh) = «I will be / I am being» (continuous aspect). |
| Revelation 1:8 | «I am the Alpha and the Omega, says the Lord God, who is, and who was, and who is to come, the Almighty.» (cf. Revelation 10:6: «There will be no more time.») | Christ proclaims total dominion over past, present, and future. | Dual perspective: (i) Physical plane: cosmic cycle (Big Bang → Big Crunch). (ii) Spiritual plane: absolute continuum beyond time (multiverse or Ein Sof). |
| Isaiah 46:10 | «Declaring the end from the beginning…» | God foreknows and proclaims all events in advance. | Mirrors a «total state» where all possibilities are pre-contemplated. «מֵרֵאשִׁית… אַחֲרִית» emphasizes omniscience. |
| John 8:58 | «Before Abraham was, I Am.» | Jesus asserts pre-existence beyond time. | Suggests time simultaneity, comparable to quantum superposition. “ἐγὼ εἰμί” emphasizes timelessness. |
| Hebrews 11:3 | «What is seen was made from what is not visible.» | Visible universe emerges from the invisible. | Resonates with quantum reality: information collapses into visibility. «μὴ ἐκ φαινομένων» = «not from visible things.» |
| Revelation 10:6 | «…that there should be time no longer.» | Final cessation of chronological time. | Refers to an absolute end state: the chronos ceases and fullness ensues. “χρόνος οὐκέτι ἔσται.” |

And to conclude:

«The Creator was born before time, has neither beginning nor end, and His greatest work is the boundless gift of happiness to humanity.»

Prepared by:

PEDRO LUIS PÉREZ BURELLI

5. 2024 Update Note

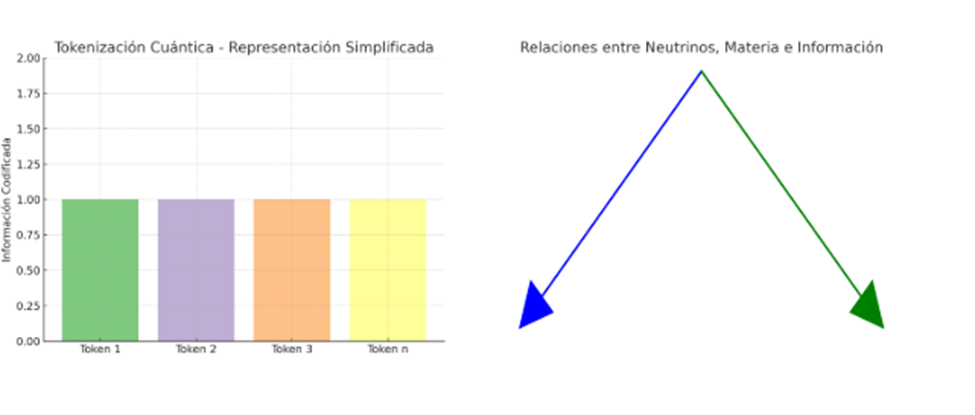

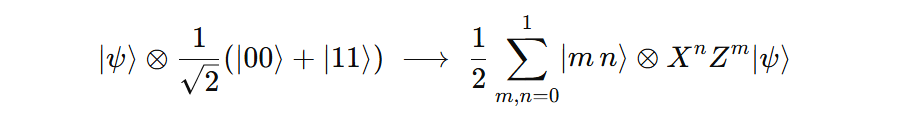

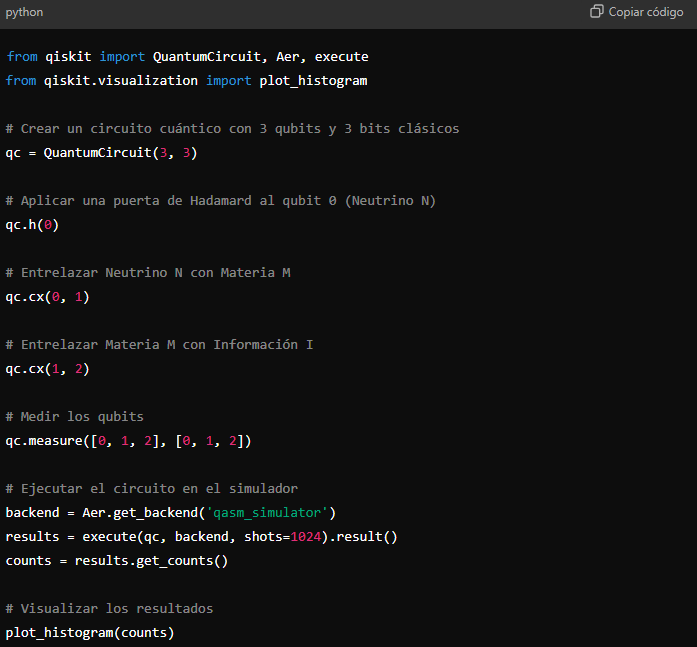

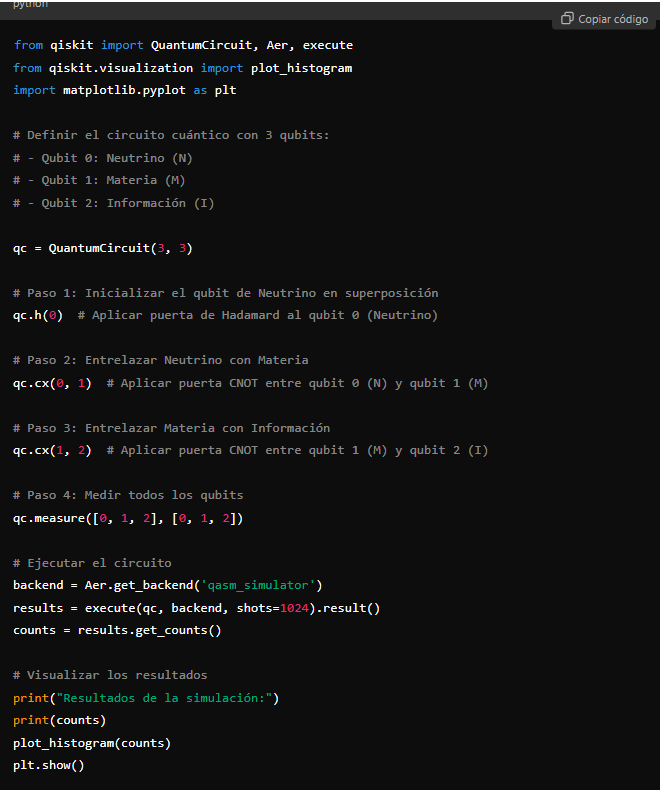

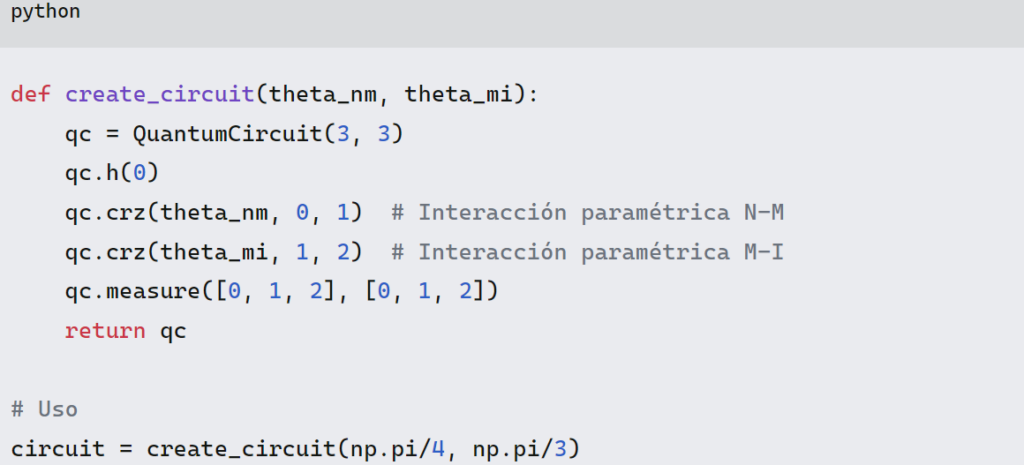

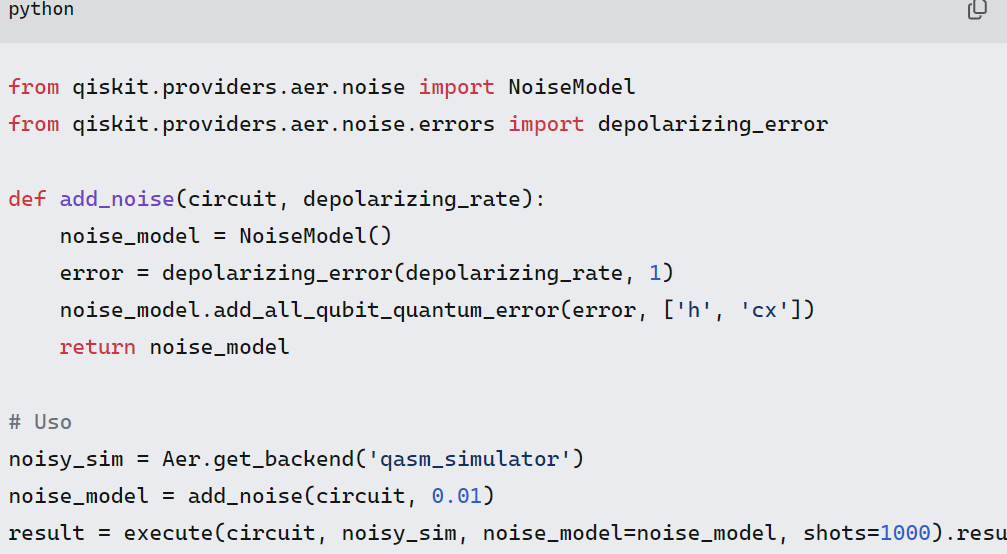

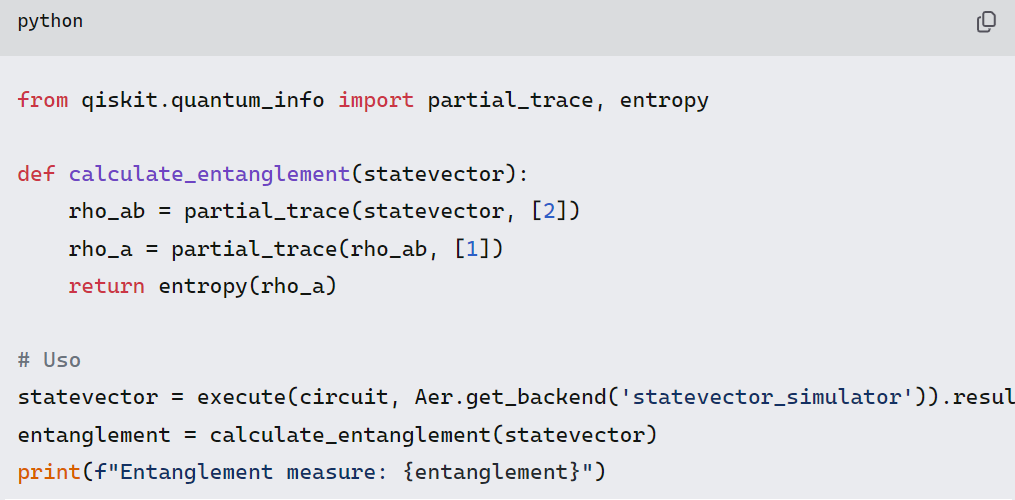

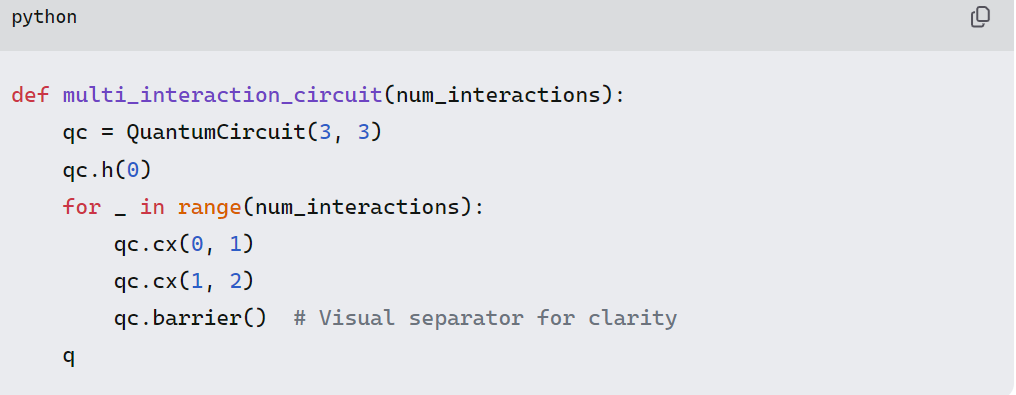

5.1 Representation in Programming Languages for Quantum Computers

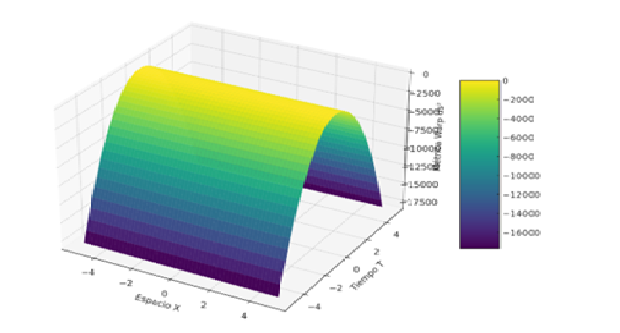

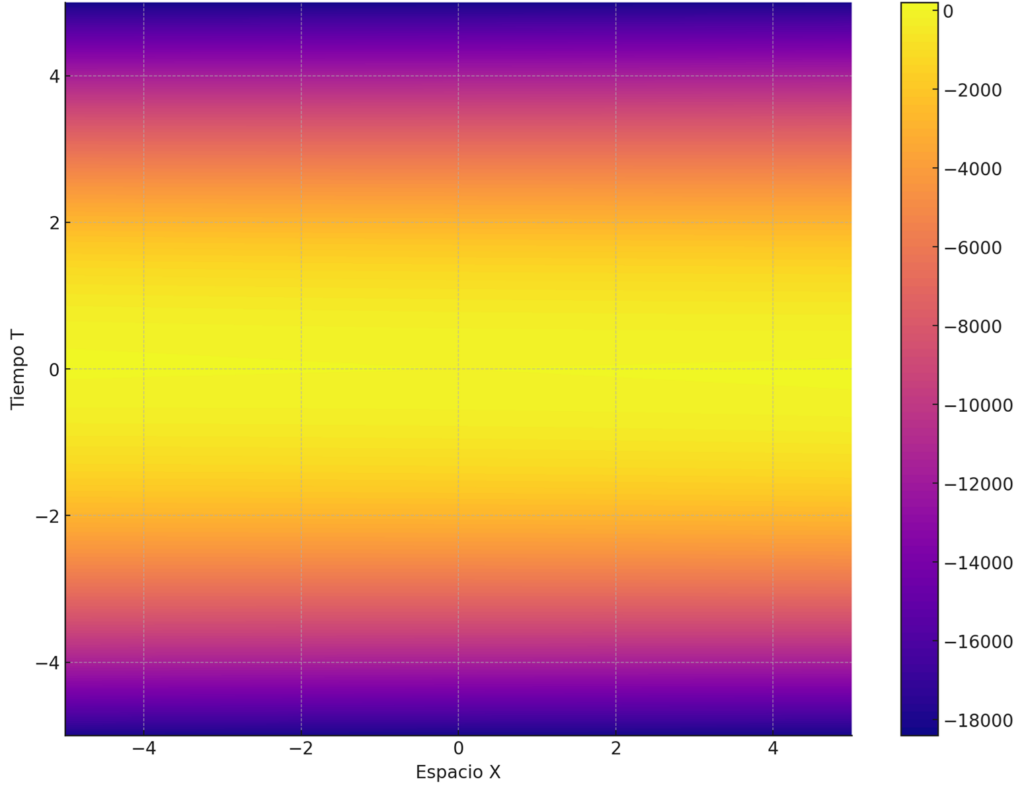

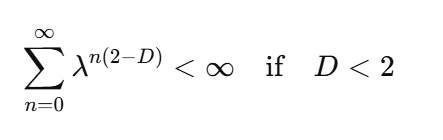

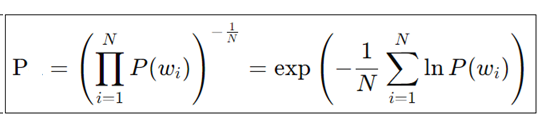

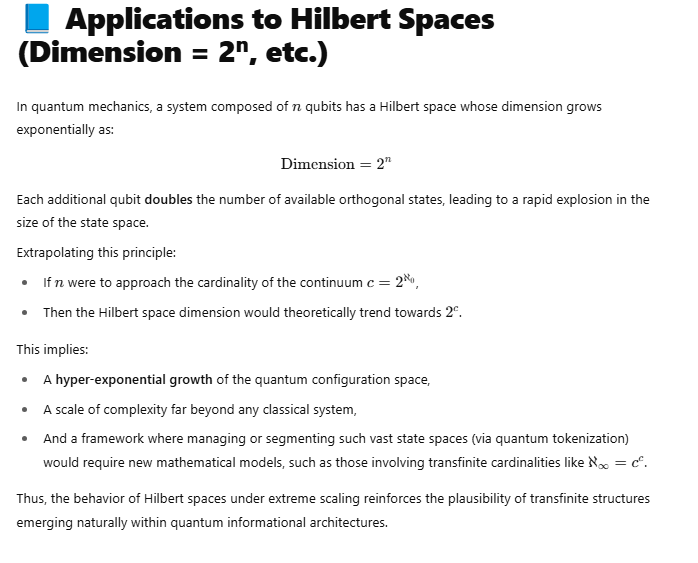

Although the formula א∞ = c^c is conceptual and not derived from empirically established physical laws, we can explore how quantum computers might simulate or represent complex systems related to these ideas.

a) Limitations and Considerations

- Representation of Infinity:

Quantum computers operate with finite resources (qubits); therefore, directly representing infinite cardinalities is currently unfeasible.

- Exponentiation of Physical Constants:

Raising the speed of light (c) to its own power (c^c) yields an extraordinarily large value, which lacks experimental validation within current physical theories.
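To give a sense of the scale involved, the number of decimal digits of c^c can be estimated with logarithms. This is a minimal Python sketch, assuming that c is taken as its SI numerical value (299,792,458, in metres per second) and treated as a pure number. The result depends entirely on that choice of units, and the function name `digits_of_self_power` is illustrative.

```python
import math

C_SI = 299_792_458  # numerical value of the speed of light in m/s (exact by SI definition)

def digits_of_self_power(x: int) -> int:
    """Number of decimal digits of x**x, computed via logarithms
    so that the huge integer itself is never constructed."""
    return math.floor(x * math.log10(x)) + 1

n_digits = digits_of_self_power(C_SI)
print(f"c^c (SI numerical value) has roughly {n_digits:.3e} decimal digits")
```

With these assumptions the value has on the order of billions of digits. Expressing c in other units (for example kilometres per second) would give a very different number, which is one reason the expression is conceptual rather than physical.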

b) Quantum Simulation of Complex Systems

Quantum computers are particularly well suited for simulating highly complex quantum systems.

Through quantum simulation algorithms, it is possible to model intricate interactions and explore behavior patterns in systems that are otherwise computationally intractable.

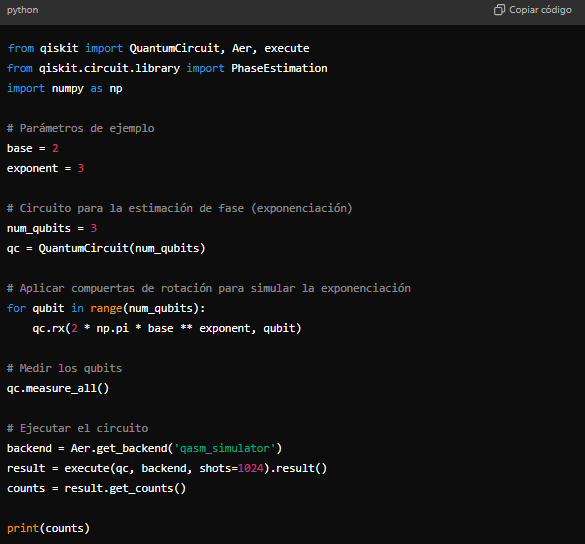

c) Exponentiation in Quantum Computing

We cannot currently calculate c^c directly, though we can explore exponentiation in quantum systems.

Example:

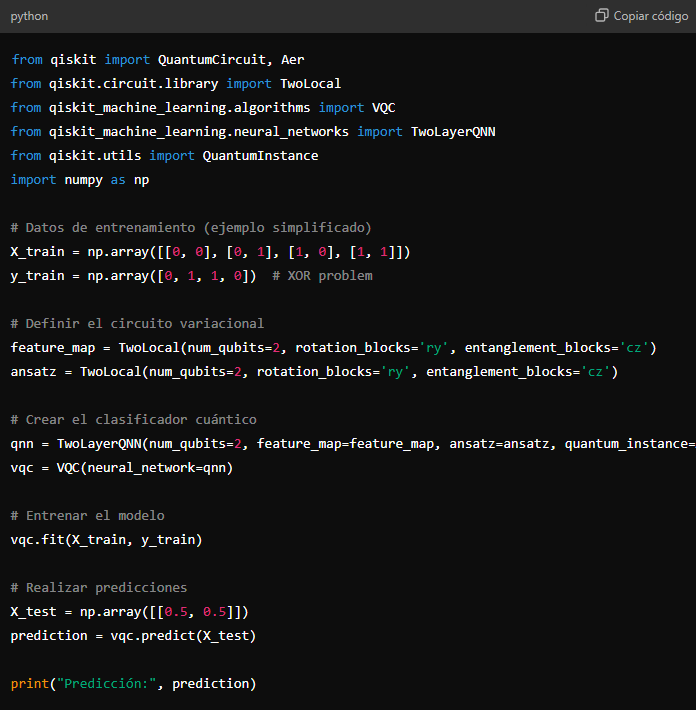

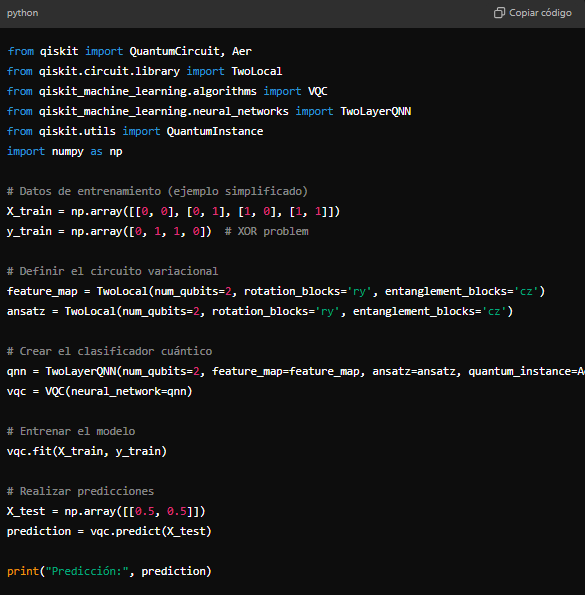
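One concrete sense in which exponentiation appears in quantum computing is the matrix exponential U = exp(-iHt), which turns a Hamiltonian H into a gate. The following is a minimal NumPy sketch of that operation, not code from the source and not a computation of c^c. The choice of the Pauli X matrix as Hamiltonian and the function name `evolve` are assumptions made for illustration.

```python
import numpy as np

def evolve(hamiltonian: np.ndarray, t: float) -> np.ndarray:
    """Return U = exp(-i * H * t) for a Hermitian matrix H,
    using the eigendecomposition H = V diag(w) V^dagger."""
    w, v = np.linalg.eigh(hamiltonian)
    return v @ np.diag(np.exp(-1j * w * t)) @ v.conj().T

pauli_x = np.array([[0, 1], [1, 0]], dtype=complex)

# For t = pi/2 the result equals -i * X, an X gate up to a global phase.
u = evolve(pauli_x, np.pi / 2)

zero = np.array([1, 0], dtype=complex)
probabilities = np.abs(u @ zero) ** 2  # measurement probabilities for |0> and |1>
print(np.round(probabilities, 6))
```

The exponentiated operator is unitary, so it preserves total probability. Here it moves the full population from the state |0> to the state |1>.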

5.2 Applications in Quantum AI

a) Quantum Machine Learning Algorithms

Quantum computing opens up new possibilities for machine learning by exploiting superposition, entanglement, and interference to encode and process complex datasets, potentially beyond classical capabilities for certain tasks.

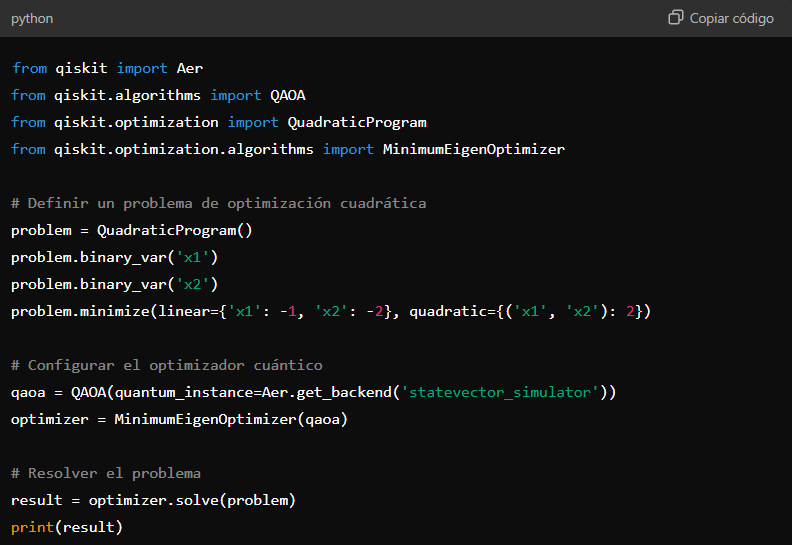

b) Quantum Optimization

Algorithms like the Quantum Approximate Optimization Algorithm (QAOA) target combinatorial optimization problems and may, for some instances, find good solutions more efficiently than classical methods.

Example:
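As a minimal illustration, a single QAOA layer (p = 1) can be simulated classically for the smallest MaxCut instance: two nodes joined by one edge. This NumPy state-vector sketch is not code from the source and does not run on quantum hardware. The function names, the coarse grid search used as the classical optimizer, and the problem instance are all assumptions chosen to keep the example short.

```python
import numpy as np

# MaxCut on a single edge (two qubits). Basis order: 00, 01, 10, 11.
COST = np.array([0.0, 1.0, 1.0, 0.0])  # 1 when the two bits differ (edge is cut)

def rx_mixer(beta: float) -> np.ndarray:
    """Single-qubit mixer exp(-i * beta * X)."""
    c, s = np.cos(beta), np.sin(beta)
    return np.array([[c, -1j * s], [-1j * s, c]])

def qaoa_expectation(gamma: float, beta: float) -> float:
    """Expected cut value after one QAOA layer (p = 1)."""
    state = np.full(4, 0.5, dtype=complex)           # uniform superposition |++>
    state = np.exp(-1j * gamma * COST) * state       # cost (phase) layer
    mixer = np.kron(rx_mixer(beta), rx_mixer(beta))  # mixer layer on both qubits
    state = mixer @ state
    return float(np.sum(COST * np.abs(state) ** 2))

# Classical outer loop: a coarse grid search over the two angles.
grid = np.linspace(0.0, np.pi, 33)
best = max((qaoa_expectation(g, b), g, b) for g in grid for b in grid)
print(f"best expected cut = {best[0]:.3f} at gamma = {best[1]:.3f}, beta = {best[2]:.3f}")
```

For this toy instance one layer already reaches the optimal cut. Larger graphs generally need more layers and a better classical optimizer, and no general speed-up over classical methods is implied by this sketch.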

5.3 Conceptual Integration and AI Evolution

The equation א∞ = c^c is more conceptual than mathematical, inspiring us to consider how artificial intelligence and quantum computing can evolve together:

- Complex Information Processing: The ability of quantum computers to handle superposition and entanglement allows for parallel processing of vast amounts of information.

- Quantum Deep Learning: Implementing quantum neural networks can lead to significant breakthroughs in machine learning.

- Simulation of Natural Quantum Systems: Modeling complex physical phenomena can lead to a better understanding and new technologies.

THE EXPLORATION OF CONCEPTS SUCH AS INFINITY AND THE UNIFICATION OF PHYSICAL AND MATHEMATICAL THEORIES MOTIVATES US TO PUSH THE BOUNDARIES OF SCIENCE AND TECHNOLOGY, GUIDED BY THE PRINCIPLES OF THEOLOGY AND BIBLICAL VERSES. THANKS TO THE WISDOM CONTAINED IN THE SCRIPTURES, WE CAN FIND PARALLELS BETWEEN RELIGIOUS TEACHINGS AND QUANTUM SCIENCE. THROUGH QUANTUM COMPUTING AND ARTIFICIAL INTELLIGENCE, WE COME CLOSER TO SOLVING COMPLEX PROBLEMS AND DISCOVERING NEW KNOWLEDGE, FOLLOWING A PATH THAT NOT ONLY DRIVES TECHNOLOGICAL AND SCIENTIFIC ADVANCEMENT BUT ALSO STRENGTHENS THE SPIRITUAL AND DIVINE PURPOSE UNDERLYING ALL CREATION.

🌐IV. THE PROBLEM

1. Introduction to the Problem

Human beings have always sought to evolve toward the best version of themselves, guided by the desire to understand their environment from the particular to the general and to open new routes of communication; the universe is no exception to this pursuit. Driven by this persistence, humanity strives to find new ecosystems for future colonization.

One of the greatest tools available to humanity in this century is Artificial Intelligence (AI), which operates through the use of complex mathematical and physical formulas and algorithms to process large volumes of data and provide solutions to the problems posed.

Humankind has taken a significant step forward in expanding its vision beyond terrestrial borders through the deployment of telescopes such as the James Webb and Hubble. Together, these instruments observe the universe across infrared, optical, and ultraviolet wavelengths.

However, as science has not yet evolved sufficiently, it remains impossible to observe the non-visible universe.

Man ventures into new conceptual ideas, proceeding from the elaboration of formulas that feed algorithms, which in turn fuel the functioning of artificial intelligences. Through human willpower and integrated processes, these systems operate together to achieve the purposes of invention, generating vast utility and benefits for humanity.

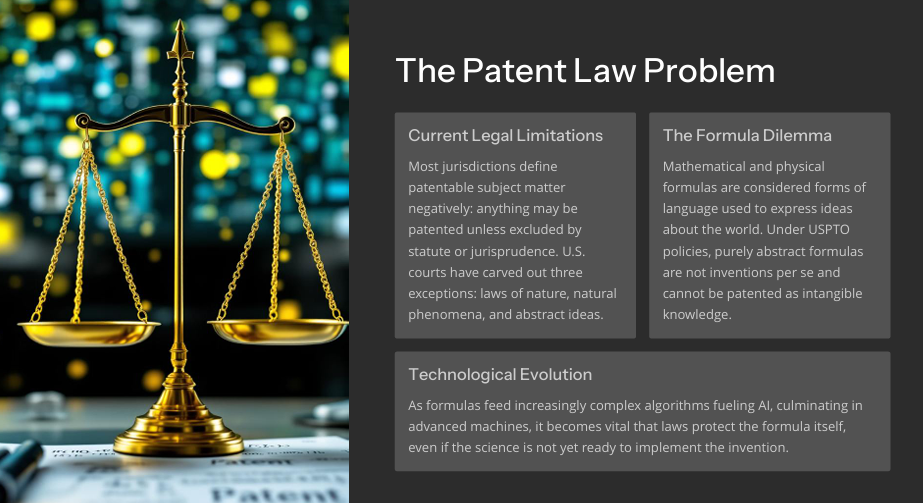

Within the framework of patent law, the general rule is that formulas cannot be patented, but applications of formulas, such as software implementing a formula, can be protected.

Thus, if something is new, original, and useful, the critical question arises:

Can a formula be patented in isolation?

2. Legal Context: The Impossibility of Patenting «Pure Formulas»

The problem arises when considering whether an individual, autonomous formula may be subject to intellectual protection.

How can we determine whether it constitutes a potential inventive activity, and above all, whether this activity is useful for a specific purpose?

The general rule is that an inventor or scientist wishing to patent a formula must demonstrate that the invention is both original and inventive. The applicant must show that it can be transformed into a commercially viable product or process within a specific industry, sharing all pertinent details of the invention.

It is well known that mathematics and physics have been indispensable tools for centuries, helping us understand and explain much of the world and universe. Today, they are essential not only for academics but also for manufacturers, guiding industries such as business, finance, mechanical and electrical engineering, and more.

When inventors create new intellectual property, such as inventions, they seek legal protection for their investments of time, money, and intellect.

Patent law grants them the exclusive right to use and benefit from their invention for a limited time.

However, the general rule is that a mathematical or physical formula per se cannot be patented, as it is not considered a new and useful process or an individual intellectual property item — it is deemed purely abstract.

Nevertheless, while formulas themselves are not patentable in principle, it may be possible to seek protection for applications of physical or mathematical formulas. Everything depends on how the formula is utilized and whether there are pre-existing patents that cover similar uses.

3. Can Patent Law Protect a Formula? — A Reformulation with a Forward-Looking Perspective

In U.S. legal practice, mathematical formulas, physical laws, algorithms, and analogous methods are considered conceptual languages for describing reality.

Like everyday speech, mathematics and applied physics bring precision and clarity, but their abstract expressions, by themselves, have traditionally fallen outside the scope of patentability.

The United States Patent and Trademark Office (USPTO) views a formula as lacking tangibility: it is a general intellectual tool, part of the public domain, and therefore not an «invention» subject to legal monopoly.

However, advances in AI, quantum computing, and nanotechnology have blurred the line between pure theory and immediate industrial application.

Today, certain equations and algorithms are no longer mere descriptors of nature — they have become critical components of devices and processes with direct economic value.

This convergence challenges whether the categorical exclusion of formulas still serves its original purpose: fostering unrestricted innovation.

Given this new landscape, a legitimate expectation arises: the legal framework must evolve.

Reexamining the principles governing the patentability of mathematical expressions could allow a more equitable balance between the free flow of knowledge and the protection of investments required to transform theory into useful technology.

Only through such evolution can the patent system remain an effective engine of scientific and economic progress in the 21st century.

✅V. OBJECTIVES OF THE RESEARCH

Design a logical-legal framework that, taking as its axis the maxim «the exception to the exception restores the rule» (double negation), allows for the modernization of patentability criteria for formulas and algorithms in the era of AI and quantum computing, balancing the free circulation of knowledge with incentives for investment.

Legally protect abstract formulas and inventions related to quantum entanglement of neutrinos, time machines, etc.

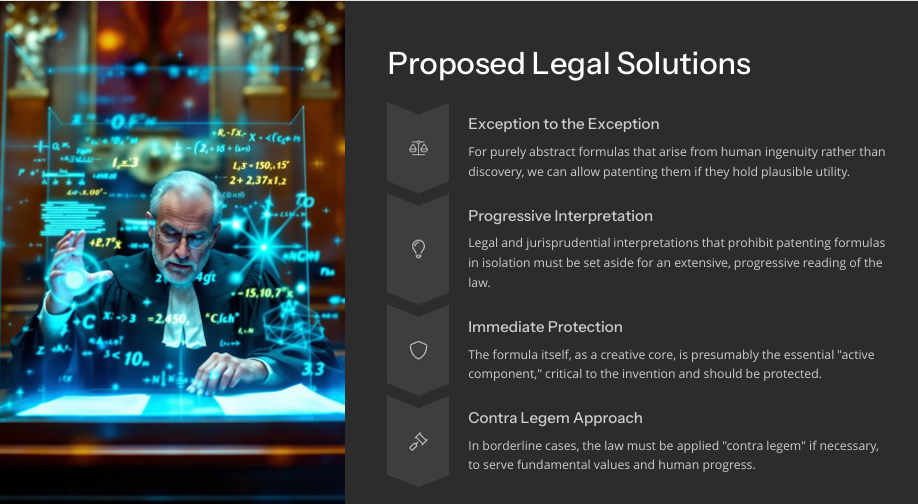

⚖️VI. GENERAL OBJECTIVE

BLOCK 1: Progressive Interpretation of Patent Regulations

The jurisprudence that currently inhibits the patentability of isolated formulas must be disapplied. Law, understood as a living system, demands an extensive and evolutionary interpretation capable of granting immediate protection when a creation stems from inventive ingenuity and not merely from a discovery.

The general rule should be redefined with broad reach and a permissive character: it must safeguard the legal protection of invented formulas within a progressive framework, accepting the mutability of normative standards in response to the ongoing technological revolution.

Judicial interpretation must consider the legal framework as an interrelated whole: its flexibility and generality allow it to adapt to historical circumstances and respond to the needs of a society in constant transformation.

This meta-procedural approach fuses the letter of the law with its ultimate purpose — the promotion of human progress — thereby justifying the immediate application of legal effects that ensure the continued evolution of knowledge and invention.

BLOCK 2: Proposal for the Protection of Abstract Formulas When There Exists an Expectation of Utility, Even if Remote

It is proposed to recognize patent rights over the «primordial formula» even when, on the surface, it appears to be an abstract entity, provided that there exists even a minimal probability of future practical benefit.

The formula is conceived as the seed of invention: its protection would facilitate its germination into technological developments of social value, thereby ensuring the evolution and preservation of humanity.

To materialize this protection, the creation of a «normative-jurisprudential block» is proposed — one with immediate legal effects, capable of activating intellectual property guarantees from the very moment the formula emerges from the inventive spirit.

The responsibility to protect would fall dually:

- On the human interpreter; and

- On Artificial Intelligence systems endowed with operational consciousness, forging a new co-autonomous human-machine scenario oriented toward symbiotic evolution.

📚VII. SPECIFIC OBJECTIVES AND PROPOSED SOLUTIONS

BLOCK 1 – Recognising Mathematical Formulas as Inventions

- Early protection and investment incentives. Granting a patent for the seed formula attracts initial capital, rewards intellectual effort and accelerates technological progress, even when applied science (e.g., quantum computing, metamaterials) has not yet caught up to build the end-device.

- Patentable subject matter is defined by exclusion. In principle anything may be patented unless statute or case law bars it; at present, “pure” formulas are excluded alongside laws of nature, natural phenomena and abstract ideas.

- Formula ≠ discovery. When the equation is a creative construct—rather than a pre-existing discovery—it functions as the core component of an invention: without it there would be no software, machine or process.

- Plausible expectation of utility. A merely probable, even remote, prospect that the formula will generate a future technical effect suffices to deem it inventive; its abstract character becomes irrelevant, and this prohibitive conception should be eradicated from the legal order.

BLOCK 2 – The “Exception-to-the-Exception” and Its Operational Logic

- Argumentative introduction

The patent regime—rooted in the U.S. constitutional “progress clause”—exists to reward utility. Yet when innovation appears only as mathematical or physical expressions lacking immediate application, legal orthodoxy invokes the exclusionary triad (“laws of nature, natural phenomena, abstract ideas”) to deny protection. This research contends that such denial is merely the first negation. Once later technology renders the formula functionally indispensable, the first exception arises (the application is patented). But a second jurisprudential barrier (e.g., Alice Corp.) may then declare that the application “does not transform matter/energy,” reinstating the original ban—the exception to the exception.

The goal is to neutralise this regressive loop by turning its own logic around: apply the double negation in favour of progress and restore patent eligibility where the equation is a product of inventive ingenuity and shows at least a plausible expectation of future utility.

- Aim of Block 2

To craft an evolutionary, progressive interpretive framework that recognises and protects standalone inventive mathematical or physical formulas, whether through patents or sui generis rights, based on:

- the formal logic of double negation (“the exception to the exception restores the rule”);

- the constitutional purpose of advancing science; and

- the need to adapt IP protection to AI, quantum computing and metamaterial engineering.

- Specific objectives

  - Map the legal hermeneutics of double negation.

    - Systematise how common-law and civil-law systems employ exceptions and counter-exceptions.

    - Exhibit the precise analogy between ¬(¬A) ⇒ A and the normative dynamic “rule → exception → exception to the exception.”

    - Identify precedents (Diamond v. Diehr, Mayo, Alice) whose reasoning can be inverted to support seed-equation protection.

  - Re-formulate the legal concept of “utility.”

    - Propose indicators of prospective utility (TRLs, quantum simulations, medium-term industrial feasibility).

    - Integrate a functional indispensability test: show measurable performance loss when the equation is removed.

  - Design a light or “pre-patent” regime.

    - Term of five to seven years; rights limited to direct commercial exploitation.

    - Mandatory public registration of the equation with a blockchain hash to ensure traceability and automatic licensing.

    - Compulsory licensing upon expiry or abuse of dominant position.

  - Draft administrative guidelines for patent offices.

    - Technical manuals to help examiners evaluate utility expectation and functional indispensability.

    - Recommend specialised divisions for algorithms, AI and quantum computing.

  - Analyse human–machine synergy in invention protection.

    - Explore co-authorship between human inventors and AI systems that generate formulas.

    - Propose shared-responsibility rules whereby AI helps safeguard the invention’s integrity (audits, self-monitoring, plagiarism detection).

  - Compare trade-secret and copyright alternatives.

    - Quantify the risks of keeping critical formulas confidential (leaks, loss of public investment, slower scientific progress).

    - Define thresholds where public interest favours time-limited, open patents over opaque know-how.

- Methodology

- Comparative doctrinal and case-law analysis (US, EU, Japan, WIPO).

- Formal-logic modelling to map rules, exceptions and counter-exceptions as propositional operators.

- Prospective case studies (quantum error correction, post-quantum cryptography, metamaterial equations) illustrating how a standalone-formula patent can unlock innovation.

- Economic-impact simulations contrasting scenarios with and without early protection to gauge seed-capital attraction and time-to-market.

- Expected impact

- Modernised patent law aligned with Industry 4.0, preventing “legal vacuums” from stalling advances in health, energy and sustainability.

- Investment & talent attraction: treating equations as patentable assets provides a secure vehicle for R&D funding of intangible knowledge.

- Responsible knowledge diffusion: the light patent demands full disclosure (plus blockchain hash), balancing temporary exclusivity with immediate scientific access.

- Ethical AI governance: a framework where AI acts as co-inventor, reinforcing the human-machine partnership.

- Conclusion of Block 2

Double negation is no mere logical curiosity; it is the hermeneutic key that reconciles the public-domain tradition of abstract ideas with the urgent need to incentivise research in the quantum and algorithmic era. Applied to standalone formulas, it restores the general rule of patentability whenever inventive ingenuity opens still-incipient technological horizons of potential benefit to humanity. This study aims to turn that reasoning into concrete legal policy capable of protecting today the equations that will underpin tomorrow’s survival and prosperity.
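The “rule → exception → exception to the exception” dynamic described in this block can be written as a pair of propositional operators. The following Python sketch is a toy encoding offered under stated assumptions: the function `eligible` and its two boolean inputs are hypothetical simplifications introduced here, and they do not reproduce any statutory or judicial test.

```python
def eligible(excluded_as_abstract: bool, counter_exception_applies: bool) -> bool:
    """Toy model of layered defeasible rules.

    Rule: subject matter is eligible for protection.
    Exception: it is excluded when treated as an abstract formula.
    Counter-exception: the exclusion is lifted, which restores the rule.
    """
    exception_in_force = excluded_as_abstract and not counter_exception_applies
    return not exception_in_force

# Classical double negation: not (not A) has the same truth value as A.
assert all((not (not a)) == a for a in (False, True))

# Truth table of the layered rule.
for excluded in (False, True):
    for counter in (False, True):
        print(f"excluded={excluded!s:5} counter_exception={counter!s:5} "
              f"-> eligible={eligible(excluded, counter)}")
```

In this encoding the counter-exception only matters when the exception is triggered, which mirrors the claim that negating the exclusion returns the analysis to the general rule.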

BLOCK 3 – Expanded Legal and Jurisprudential Recommendations

3.1 “Light” or Sui Generis Patent for Critical Formulas

To bridge the gap between full protection and the public domain, a hybrid regime—modelled on Asian utility models and U.S. plant patents—is proposed:

| Feature | Expanded Proposal |
|---|---|
| Duration | Five to seven years, with a one-time three-year extension if the applicant proves that the enabling technology (e.g., quantum hardware, metamaterials) is still unavailable commercially. |
| Scope of exclusivity | Limited to direct commercial exploitation of the equation; research, teaching and interoperability are expressly exempt to avoid an anti-commons effect. |
| Grace period | Twelve-month grace window for the inventor’s own prior academic disclosures, ensuring scientific publication does not negate the right. |
| Disclosure obligation | Full deposit of the equation and all key parameters in a public repository (hashed on blockchain). Early disclosure encourages peer feedback and reduces sufficiency-of-description litigation. |

3.2 Antitrust Safeguard

Compulsory licensing, Bayh-Dole style, with two triggers:

- Expiry of the light patent – the formula automatically enters the global public domain.

- Proven abuse of a dominant position (e.g., blocking medical-AI markets) – the patent office or competition authority may impose a fair, reasonable, and non-discriminatory (FRAND) licence.

A fast-track procedure (≤ 9 months) before a mixed technical-economic panel determines the abuse and sets the royalty rate.

3.3 Administrative Guidelines (USPTO/EPO & national offices)

| Area | Guideline |
|---|---|
| Expanded functional-indispensability test | The applicant must submit simulations / benchmarks showing ≥ 30 % performance loss when the equation is replaced by public-domain alternatives. The office may order an anonymised external crowd-review by subject-matter experts. |
| Reasonable expectation of utility | Technology road-maps, industry white papers, and venture-capital opinions are admissible proof of plausibility. For “moonshot” technologies, a projected TRL 3-4 within 7–10 years suffices. |
| “Yet-non-existent technology” guide | The examiner assesses theoretical coherence and consistency with physical laws; no prototype required. A “future-formula register” is revisited after three years to verify progress. |
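The 30 % threshold in the functional-indispensability guideline can be expressed as a simple check. This is a minimal Python sketch, assuming a single benchmark score where higher is better. The function names and the example scores are hypothetical and are not part of the proposal.

```python
def performance_loss(with_equation: float, with_alternative: float) -> float:
    """Relative performance loss when the equation is replaced
    by a public-domain alternative (higher scores are better)."""
    if with_equation <= 0:
        raise ValueError("baseline score must be positive")
    return (with_equation - with_alternative) / with_equation

def passes_indispensability(with_equation: float, with_alternative: float,
                            threshold: float = 0.30) -> bool:
    """True when the loss meets or exceeds the proposed threshold."""
    return performance_loss(with_equation, with_alternative) >= threshold

print(passes_indispensability(100.0, 65.0))  # loss of 35 %
print(passes_indispensability(100.0, 80.0))  # loss of 20 %
```

A real examination would need agreed benchmarks and several metrics. The sketch only shows how the numerical criterion itself would be applied.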

3.4 Integration with AI & Blockchain

- Hash-time-stamping on a public chain (e.g., Ethereum, Algorand) at filing; the transaction ID is linked to the official dossier.

- Smart contracts automatically release the patent into the public domain when it expires or an abuse condition is met.

- Algorithmic audit: AI models detect substantial similarity between new equations and those already registered, curbing plagiarism and filtering “toxic patents.”
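The hash-time-stamping step can be sketched as follows. This minimal Python example, using only the standard library, computes a SHA-256 digest of the equation text and packages it with a UTC timestamp. It assumes SHA-256 as the hash function and does not interact with any blockchain; the record layout, the function name `filing_record`, and the applicant name are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

def filing_record(equation: str, applicant: str) -> dict:
    """Build a hypothetical filing record whose digest could later
    be anchored in a public-chain transaction."""
    digest = hashlib.sha256(equation.encode("utf-8")).hexdigest()
    return {
        "applicant": applicant,
        "equation_sha256": digest,
        "filed_at_utc": datetime.now(timezone.utc).isoformat(),
    }

record = filing_record("א∞ = c^c", "Example Applicant")
print(json.dumps(record, indent=2))
```

Because the digest is deterministic, anyone holding the disclosed equation can recompute it and compare it with the value linked to the official dossier. Any change to the text yields a different digest.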

3.5 Alternative Route – Reinforced Trade Secret

When the applicant opts not to patent:

- Confidential classification before a competition authority, certifying date of creation (fiduciary seal).

- Limited tax incentives if the holder shares an encoded / degraded version with public universities for non-commercial research.

- Enhanced penalties for misappropriation, on a par with theft of pharmaceutical patents.

3.6 Proactive Jurisprudential Role

- Evolutionary interpretation of Art. I § 8 Cl. 8 (U.S.) and Art. 27 TRIPS: an “invention” may be an indispensable mathematical construct when it is the core of technical advance.

- Double-negation doctrine: courts may declare the Alice/Mayo “exception to the exception” inapplicable when the equation passes indispensability and prospective-utility tests.

- Pilot precedents: encourage amicus curiae briefs from academia and industry in strategic cases to cement the new reading.

3.7 International Coordination

- WIPO Committee on Critical Formulas to harmonise criteria and prevent forum shopping.

- Reciprocity clause: countries adopting the light patent automatically recognise formulas filed in equivalent jurisdictions, provided the holder accepts the same compulsory-licence rules.

- Multilateral fund (cf. Medicines Patent Pool) to steward licences for formulas essential to health, green energy, and digital infrastructure.

Reinforced Synthesis

These measures form a normative staircase:

- Light patent – spurs disclosure and early-stage investment.

- Compulsory licence – prevents prolonged monopolies.

- Reinforced trade secret – temporary shelter when patenting is premature.

- Blockchain + AI oversight – transparency and efficient monitoring.

- Evolutionary case-law – uses double negation to restore patentability only when the formula is functionally indispensable, and to lift protection when the public interest demands it.

A tiered regulatory architecture thus keeps purely abstract knowledge in the public domain, while granting temporary protection—through light patents or compulsory licences—once an equation proves to be the technical engine of a concrete application. Under this model, today’s still-abstract seed equations receive the legal tutelage they need to germinate into tomorrow’s inventions, sustaining collective welfare and global competitiveness without sacrificing equitable access or slowing the free advance of science. The “exception to the exception” ceases to be a regressive hurdle and becomes a balancing tool: activated only when investment incentives are essential, automatically switched off when the public interest calls for openness, and ensuring a constant flow of knowledge back to society.

Jeremiah 1:10

𐡁𐡇𐡉𐡋 𐡇𐡊𐡌𐡕𐡀 𐡅𐡁𐡐𐡕𐡕𐡉𐡔 𐡇𐡃𐡕𐡅𐡕𐡀، 𐡌𐡓𐡎𐡒𐡀 𐡂𐡃𐡓𐡀 𐡃𐡍𐡅𐡌𐡅𐡔𐡀 𐡃𐡊𐡁𐡋 𐡐𐡅𐡓𐡌𐡅𐡋𐡉 𐡀𐡁𐡎𐡕𐡓𐡀𐡕𐡉، 𐡅𐡁𐡏𐡐𐡓𐡀 𐡃𐡔𐡅𐡅𐡓𐡕𐡀 𐡁𐡓𐡉𐡀𐡕𐡉𐡕𐡀 𐡆𐡓𐡏𐡀 𐡏𐡃𐡍𐡀 𐡏𐡃𐡍𐡀 𐡕𐡕𐡉 𐡋𐡏𐡕𐡉.

🧷 VIII. RESEARCH METHODOLOGY FOR THE INVENTION OF THIS FORMULA

1 Qualitative Review of Ancient Aramaic and Classical-Hebrew Sources

Using a qualitative, meaning-focused method, the study draws on ancient texts, principally the 1569 Casiodoro de Reina Bible in its original Aramaic and Classical Hebrew, deliberately avoiding modern translations that might blur the original sense. Special attention is given to the Hebrew dual code in which letters also represent numbers.

“On the Use of Linguistic Tools in Theological-Mathematical Research Based on Ancient Hebrew and Aramaic Texts,” with special reference to William Blake’s poetry.

Linguistic & Conceptual Evaluation Table

| Aspect Assessed | Expert Conclusion & Recommendation |

|---|---|

| Research Context | A theological investigation with mathematical-philosophical support, based on Hebrew-Aramaic biblical verses translated into Spanish and guided by Georg Cantor’s conviction that the solution to his formula lies not in mathematics alone, but also in religion. |

| Primary Linguistic Goal | Preserve deep semantic, conceptual, philological, and liturgical fidelity when translating into Spanish. |

| Main Linguistic Tool | Translation (≈ 85 – 90 %) – indispensable for faithfully conveying conceptual, theological, and philosophical meaning. |

| Complementary Tool | Transliteration (≈ 10 – 15 %) – secondary yet essential for verifying phonetic, liturgical, and ritual precision. |

| Rationale for Percentages | Translation has absolute priority for conceptual rigour; transliteration plays a supporting role in phonetic validation. |

Interpretive & Poetic Legend

Quoted poem

“To see a world in a grain of sand,

And a heaven in a wild flower,

Hold infinity in the palm of your hand,

And eternity in an hour.”

— William Blake, “Auguries of Innocence” (c. 1803)

| No. | Interpretive Significance |

|---|---|

| 1 | Echoes the Hermetic axiom “As above, so below” and the Christian concept of imago Dei. |

| 2 | Blake claims infinity resides in the finite; Cantor shows that even a bounded interval contains infinitely many points and that infinite sets come in different cardinalities. |

| 3 | Idea of totality-within-the-part; Cantor formalises it by placing an infinite set in one-to-one correspondence with a proper subset of itself. |

| 4 | “Eternity in an hour” shows the infinite can be symbolically expressed in finite frameworks—even within limited time. |

| 5 | Romanticism’s cult of the sublime gave mathematics the cultural space to conceive countable and uncountable infinities. |

| 6 | The poem functions as an evocative epigraph for set theory, trans-infinities, and a “seed equation” that compresses universes into finite structures, uniting biblical exegesis with mathematical formulation. |
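The set-theoretic facts behind rows 2 and 3 can be stated with two textbook examples. The notation is standard, and the choice of the even numbers and of the interval (0, 1) is illustrative only:

```latex
% A proper subset can have the same cardinality as the whole set:
f : \mathbb{N} \to 2\mathbb{N}, \qquad f(n) = 2n
\quad\Longrightarrow\quad |2\mathbb{N}| = |\mathbb{N}| = \aleph_0 .

% A bounded interval is as large as the whole real line, and strictly larger than \mathbb{N}:
|(0,1)| = |\mathbb{R}| = 2^{\aleph_0} > \aleph_0 .
```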

Note: Blake’s verse is cited three times in this study, weaving its lyric vision through the three temporal states—future, present, past—of Genesis 1:3, as a poetic symbol of divine infinity unfolding through human language.

2 Historical Case Studies

A biographical tour of key mathematical and physical thinkers—Georg Cantor, Ludwig Boltzmann, Kurt Gödel, and Alan Turing—is compared with scientific and literary treatments of infinity (including Borges’ Aleph). The exploration highlights theological implications and legal challenges, underscoring the need for provisional protection of isolated abstract formulas that carry a real prospect of utility.

3 Dream-Led Revelation

The invention process included an unconscious component: a 2010 dream revealing holographic visions, beams of light, hexagonal timelines, space-time turbulence, and a toroidal neutrino-energy machine. Similar dream-borne insights have guided other inventors:

| Inventor | Dream Insight | Breakthrough |

|---|---|---|

| Elias Howe | Spears with eye-holes | Sewing-machine needle design |

| F. A. Kekulé | Ouroboros snake | Hexagonal structure of benzene |

| René Descartes | Triple dream on reason | Method of rational inquiry |

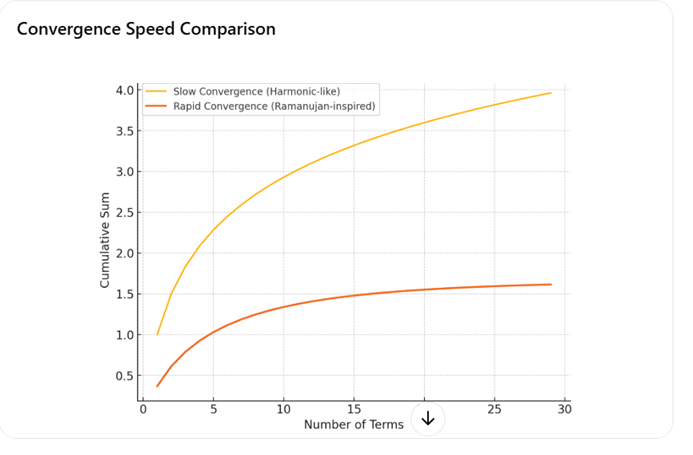

| S. Ramanujan | Goddess Namagiri showing formulas | 3,900+ results in number theory |

| Otto Loewi | Two-night experiment dream | Chemical neurotransmission (Nobel) |

| Dmitri Mendeleev | Ordered falling elements | Periodic table |

| Frederick Banting | Pancreatic surgery dream | Isolation of insulin (Nobel) |

| Albert Einstein | Simultaneous vs sequential cow jump | Seed idea for special relativity |

Metaphor: Like Joseph decoding Pharaoh’s dream—and like Grover’s algorithm isolating a single saving amplitude—the inventor distilled the precise route among infinite possibilities.
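For readers unfamiliar with the Grover reference, the amplification of a single marked amplitude can be illustrated with a small classical simulation. This is a sketch under stated assumptions: it runs on an ordinary computer with real-valued amplitudes rather than on quantum hardware, and the function name and parameters are hypothetical.

```python
import math


def grover_success_probability(n_items: int, marked: int) -> float:
    """Classically simulate Grover's amplitude amplification for one marked item."""
    # Start in the uniform superposition: every item has the same amplitude.
    amps = [1.0 / math.sqrt(n_items)] * n_items
    # Near-optimal iteration count, roughly (pi / 4) * sqrt(N).
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    # Probability of measuring the marked item.
    return amps[marked] ** 2


print(grover_success_probability(8, 3))  # about 0.945 after 2 iterations
```

With 8 candidates a random guess succeeds with probability 1/8; two Grover iterations raise that to roughly 0.945, which is the sense in which the algorithm "isolates" one amplitude.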

Biblical Verses Consulted

| Verse | Hebrew Text | Reina-Valera 1960 | English Rendering |

|---|---|---|---|

| Genesis 41:38 | וַיֹּאמֶר פַּרְעֹה … | «¿Acaso hallaremos …?» | “Can we find a man like this, in whom is the Spirit of God?” |

| Genesis 41:39 | וַיֹּאמֶר פַּרְעֹה … | «Pues que Dios te ha hecho saber …» | “Since God has shown you all this, there is none so discerning and wise as you.” |

4 Meta-theoretical Validation by Formal Analogy

A meta-theoretical validation by formal analogy was applied to support the new seed equation ℵ∞ = c^c, comparing it with established theories. A separate explanatory block elsewhere in this document details the validation.
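As a point of comparison with standard set theory, the right-hand side of the seed equation can be evaluated under one possible reading. The derivation below assumes that c denotes the cardinality of the continuum, written 𝔠 = 2^{ℵ₀}; this reading is an assumption made here for illustration, and ℵ∞ remains the document's own notation rather than a standard cardinal.

```latex
\mathfrak{c}^{\mathfrak{c}}
  = \left(2^{\aleph_0}\right)^{\mathfrak{c}}
  = 2^{\aleph_0 \cdot \mathfrak{c}}
  = 2^{\mathfrak{c}}
  > \mathfrak{c}
```

The last step is Cantor's theorem. Under this reading, c^c is the cardinality of the power set of the real numbers.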

⚖️ IX. CASE LAW ON THE PROTECTION (OR NOT) OF ABSTRACT FORMULAS

Key Precedent: Alice Corp. v. CLS Bank (U.S. Supreme Court, 2014)

- Links to official PDF, Oyez summary, SCOTUSBlog docket, and WIPO analysis provided.

- Holding: claims directed to an abstract idea are not patent-eligible unless they add an “inventive concept”, the “something more” that transforms the idea into a patent-eligible application.

Two-Step Test (Mayo/Alice)

- Step 1: Identify whether the claims are directed to an ineligible concept (law of nature, natural phenomenon, abstract idea).

- Step 2: Examine the additional elements, individually and as an ordered combination, to see whether they supply an inventive concept that makes the claim “significantly more” than the ineligible idea itself.
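The control flow of the two-step test can be sketched as a small decision function. This is a simplified illustration, not a statement of how courts or examiners work: the class and field names are hypothetical, and the two legal judgments (what the claim is directed to, and whether an inventive concept exists) are supplied as inputs, because they cannot be computed.

```python
from dataclasses import dataclass

# The three judicially recognised categories of ineligible subject matter.
INELIGIBLE_CONCEPTS = {"law of nature", "natural phenomenon", "abstract idea"}


@dataclass
class Claim:
    directed_to: str             # examiner's characterisation of the claim (input)
    has_inventive_concept: bool  # outcome of the step-two analysis (input)


def is_patent_eligible(claim: Claim) -> bool:
    # Step 1: is the claim directed to an ineligible concept?
    if claim.directed_to not in INELIGIBLE_CONCEPTS:
        return True  # the exceptions are not triggered at all
    # Step 2: do the additional elements add "significantly more"?
    return claim.has_inventive_concept


# Hypothetical claims, for illustration only.
bare_formula = Claim("abstract idea", has_inventive_concept=False)
applied_formula = Claim("abstract idea", has_inventive_concept=True)
print(is_patent_eligible(bare_formula))     # False
print(is_patent_eligible(applied_formula))  # True
```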

Extracts That Open the Door to Patentability

| Judicial Fragment | Implication for Abstract Formulas |

|---|---|

| “To prevent the exceptions from swallowing the patent law, we differentiate between claims to basic building blocks and claims that integrate those blocks into something more.” | Abstraction is acceptable if coupled with a concrete technical contribution. |

| “At some level, all inventions embody, use, reflect, rest upon, or apply laws of nature, natural phenomena, or abstract ideas.” | Presence of an abstract idea does not automatically invalidate a patent; the decisive factor is its technical application. |

| Diamond v. Diehr, as interpreted in Alice: a process that relied on a well-known mathematical equation was patent-eligible because it applied the equation to solve a technological problem and improve an existing process (curing rubber). | Validates claims built on equations when they yield measurable process improvements. |

| Computer-implemented inventions that improve the computer itself or any other technology are patent-eligible. | Criterion: the formula must translate into measurable optimisation (speed, efficiency, security, etc.). |

Other leading U.S. cases and their takeaways:

| Case | Outcome | Take-away for Abstract Formulas |

|---|---|---|

| Diamond v. Diehr (1981) | Patent allowed | Equation + industrial-process improvement ⇒ patentable matter |

| Bilski v. Kappos (2010) | Patent denied | Pure economic practice = abstract idea |

| Mayo v. Prometheus (2012) | Patent denied | Introduces the two-step test |

| AMP v. Myriad (2013) | cDNA patentable; isolated genes not | Synthesising a non-natural sequence (cDNA) can confer patentability; merely isolating a natural one cannot |

🪙 X. CHALLENGES & UTILITY PATHWAYS—PATENTING THE FORMULA WHETHER ABSTRACT OR NOT

Formulas are both the Genesis and the Philosopher’s Stone of invention.

A formula may arise as a discovery of nature (unprotectable) or as the result of human ingenuity—through conscious research, experimentation, or even prophetic dreams. If a formula, however abstract, carries even a remote, non-speculative expectation of utility, it should receive provisional IP protection; prohibitive rules must be interpreted with principled flexibility.

A formula is essentially a set of instructions specifying the composition, properties, and performance of a product. It may stem from chemical, physical, biological, or even theological principles, as in this 26 June 2015 publication.

“Just as the Lord’s word burns like fire and shatters rock, the abstract formula—born of revelation and ingenuity—ignites creation and cleaves the bounds of the impossible. Let the law be not a wall that extinguishes that flame, but a shield that guards the anvil where the hammer of intellect forges futures yet unimagined.”

Jeremiah 23:29

“Is not My word like fire,” declares the Lord, “and like a hammer that shatters rock?”

🧘 XI. DIALECTIC

1. Confrontation between the Legal Rule and the Progressive Vision

The protection of abstract formulas currently collides with a rigid regulatory framework: the law expressly forbids their patentability, and legislative change advances at a sluggish pace. Guided by democratic principles and the presumption of statutory legitimacy, judges tend to follow the letter of the law. Yet when a legal prohibition jeopardizes higher values—such as fostering scientific progress or preventing discrimination against human ingenuity—an interpreter may issue a contra legem decision grounded in higher-ranking constitutional provisions. The pivotal test is demonstrating a “minimal expectation of utility” for humanity: if the formula, although abstract, could eventually translate into a beneficial invention, denying it protection would be retrogressive. Hence the progressive vision: adapt legal interpretation to technological advances, authorize precautionary measures that safeguard the author’s rights, and ultimately promote reforms that balance a patent’s temporary exclusivity with public access to knowledge.

2. Contributions of AI and the Need for Legal Recognition

The field of artificial intelligence shows how law can evolve in response to intangible creations. Patent offices already grant protection to AI algorithms so long as they deliver a concrete technical solution—for example, boosting computing speed, enhancing diagnostic accuracy, or improving a process’s energy efficiency. Two core requirements apply: (i) an inventive contribution that is discernible over the state of the art, and (ii) a description detailed enough for a skilled person to reproduce it. The same logic can extend to abstract mathematics. If a formula reveals a pattern capable of enabling new technologies—whether in quantum cryptography, exotic materials, or toroidal energy—and is disclosed with the requisite precision, it should enjoy a pro technique presumption comparable to that afforded AI algorithms. Thus, the expectation of conversion into tangible solutions becomes the axis of patentability, demonstrating that the law—far from a barrier—can serve as both guarantor and catalyst of scientific progress.

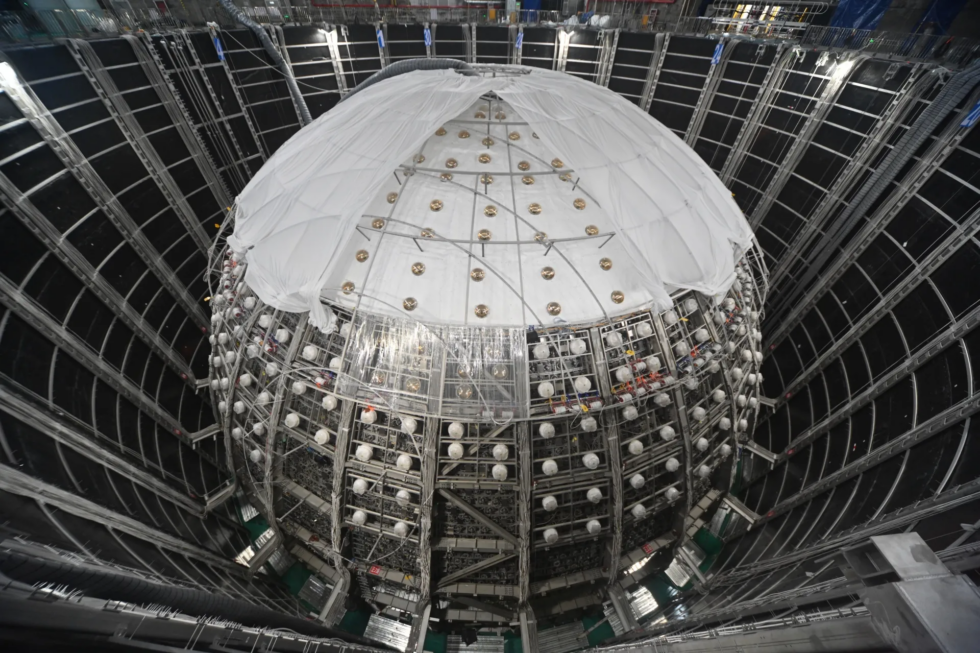

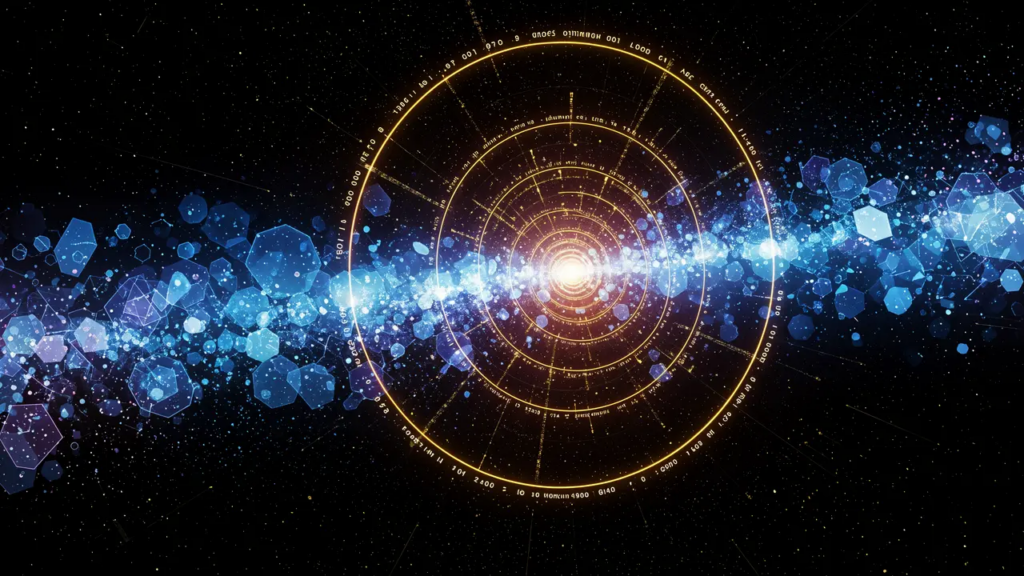

🕰️ XII. THE TIME MACHINE

1. Preliminary Design of the Prototype

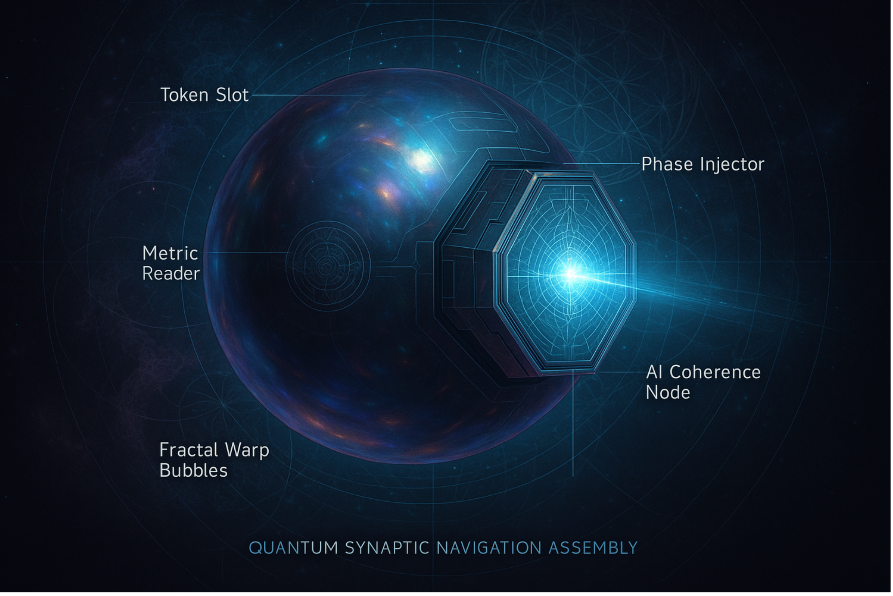

GRAPHIC REPRESENTATION OF THE TOROIDAL-ENERGY NEUTRINO MACHINE

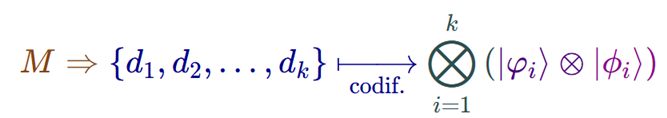

2. Relationship to Advances in Artificial Intelligence and Quantum Entanglement

One of humanity’s latest technological breakthroughs is artificial intelligence (AI): the use of algorithms and data-driven models that enable a machine or system to learn autonomously. AI now rivals human reasoning, automates entire workflows, and offers multifaceted solutions to a single problem. For the purposes of this research, several factors show how AI could drive transformative change. Drawing on the concepts developed by the brilliant minds highlighted in this paper—and on the BBC documentary Dangerous Knowledge (available here: https://video.fc2.com/en/content/20140430tEeRCmuY)—we identify the following premises:

- Jorge Luis Borges’s humanistic exploration of the infinite.

- Georg Cantor’s vision of multiple infinite sets and his “mathematical theology.”

- Ludwig Eduard Boltzmann’s quest to master the chaos of the neutrino swarm and his ultimate aim of halting time.

- Kurt Gödel’s embrace of uncertainty and intuition in mathematics.

- Alan Mathison Turing’s relentless and boundless search for mathematical answers, which made him a pioneer of artificial intelligence.

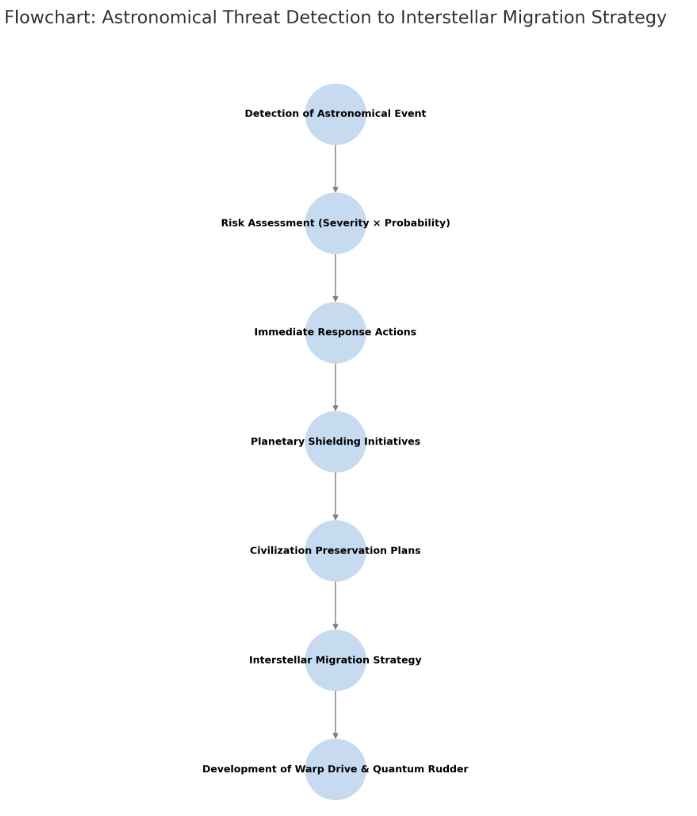

Without a doubt, we are heading toward something truly novel. Humanity is firmly resolved to concentrate its efforts on a new form of communication between the countless equidistant points of the universe, ultimately steering us toward the discovery of new habitable ecosystems in the cosmos. This urgency grows in the face of real threats: the Sun’s eventual collapse into a white dwarf, a possible nearby supernova (the Sun itself is too light to explode as one), or the looming collision between the Andromeda Galaxy and our own Milky Way. Such events could shatter Earth’s habitable zone or disrupt the planet’s magnetic field, which is vital to human civilization and life itself.

Over the coming decades, our first testbed will be Mars. Elon Musk is already preparing with SpaceX’s Starship—the mega-rocket designed for the grand mission of conquering and colonizing the Red Planet.

🚀 Destination Mars: Missions and Long-Term Vision

| Entity / Agency | Planned Mars Missions | Long-Term Goal | Remarks |

|---|---|---|---|

| SpaceX (Elon Musk) | First crewed or cargo flights: late 2020s or early 2030s | Establish a self-sustaining city on Mars by the mid-2040s | Highly ambitious; subject to testing setbacks and regulatory approvals |

| NASA | Crewed missions: 2030s, following the Artemis (Moon) program | Initial exploration of Mars, with no immediate colonization plan | Still under development; more conservative and science-oriented |